Introduction

The rapid evolution of AI tools in sales has made API reliability a critical factor for businesses looking to enhance their lead generation strategies. Essential metrics like uptime, response time, and error rates are not just numbers; they are the backbone of operational efficiency and customer satisfaction. But with so many benchmarks to consider, how can companies ensure they are not only meeting but exceeding these standards? This article delves into ten vital API reliability benchmarks that empower businesses to optimize their sales processes and secure high-quality leads.

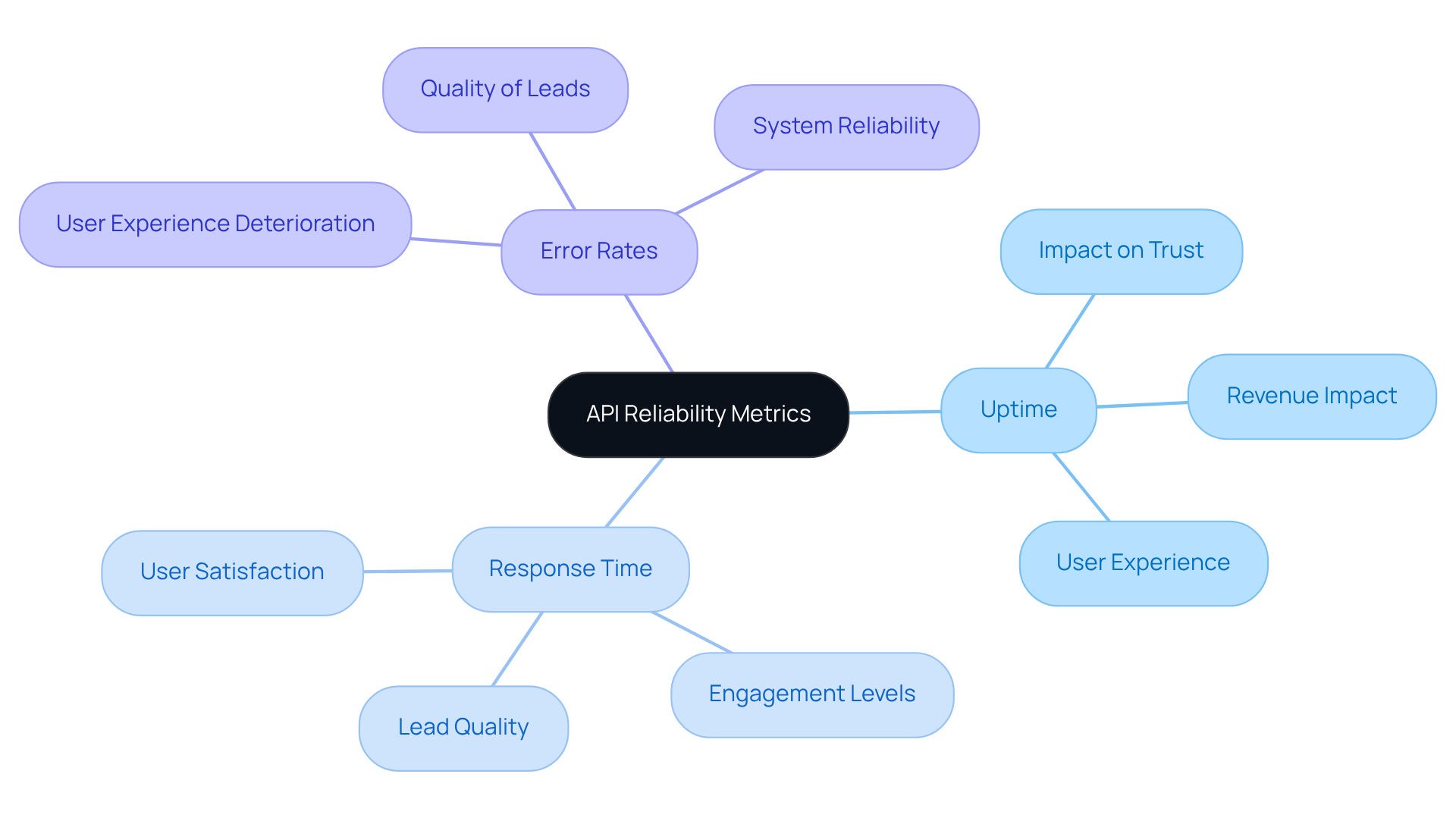

Websets: API Reliability Metrics for Enhanced Lead Generation

Websets puts API reliability at the center of its lead-generation platform, tracking three core metrics: uptime, response time, and error rates. Uptime, the percentage of time the API is fully functional, is crucial because even a small drop in that percentage translates into hours of downtime that erode trust and cost revenue. Response time measures how quickly the API processes requests; shorter times correlate with greater satisfaction and engagement. Error rates track how often requests fail, and a high rate can severely degrade the user experience and the quality of the leads generated.
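Because uptime is expressed as a percentage, differences that look tiny on paper hide large amounts of real downtime. The minimal sketch below converts an uptime percentage into the downtime it allows per year, assuming a 365-day year and ignoring planned maintenance windows; the function name is our own illustration.

```python
# Minimal sketch: convert an uptime percentage into the downtime it permits.
# Assumption: a 365-day year (8,760 hours); leap years and maintenance windows are ignored.

HOURS_PER_YEAR = 365 * 24  # 8,760 hours


def allowed_downtime_hours(uptime_percent: float, period_hours: float = HOURS_PER_YEAR) -> float:
    """Return how many hours of downtime an uptime percentage allows over a period."""
    if not 0.0 <= uptime_percent <= 100.0:
        raise ValueError("uptime_percent must be between 0 and 100")
    return period_hours * (100.0 - uptime_percent) / 100.0


if __name__ == "__main__":
    for target in (99.0, 99.9, 99.99):
        hours = allowed_downtime_hours(target)
        print(f"{target}% uptime -> {hours:.2f} hours of downtime per year")
```

Running it shows that 99.9% uptime still allows about 8.8 hours of downtime a year, while 99% allows roughly 87.6 hours, which is why a "minor" dip matters.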

By continuously monitoring these metrics, Websets keeps its platform dependable and efficient, helping users secure high-quality leads. But that's not all. Websets' AI-powered sales intelligence search lets users pinpoint companies and individuals that meet hyper-specific criteria, and it enriches results with details such as emails, company information, and job positions.

This combination of reliable APIs and advanced AI capabilities positions Websets as a leader in precise solutions for sales and recruitment. Its flexibility and scalability also meet enterprise-level needs, making it a valuable tool for professionals who want to strengthen their lead generation. Are you ready to elevate your sales strategy with Websets?

Websets: Performance Under Pressure for Real-Time AI Workloads

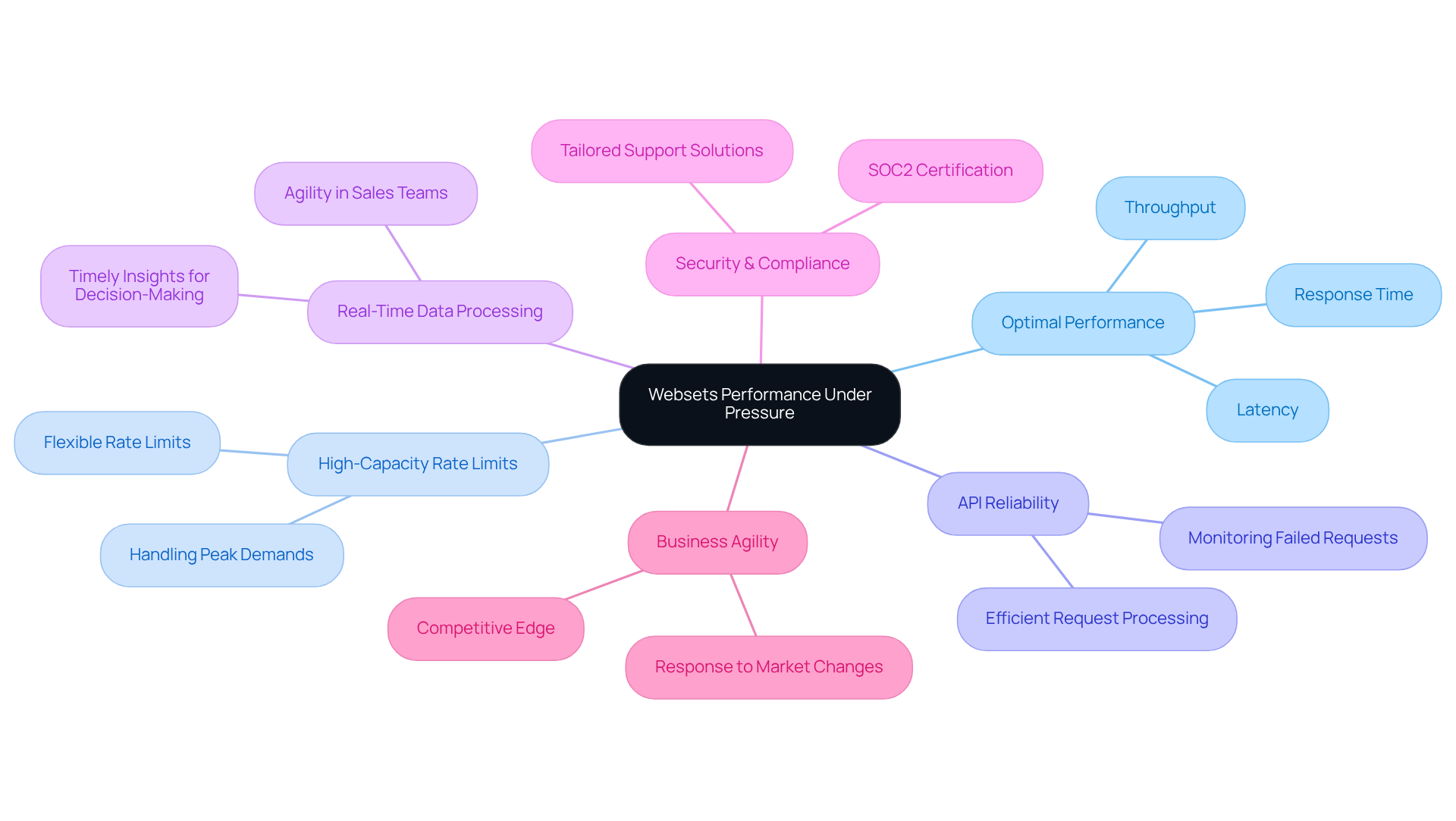

Websets stands out by maintaining optimal performance even under high load. Flexible, high-capacity rate limits let it absorb peak enterprise demand, so API requests are processed efficiently and the quality of service that businesses rely on holds up when traffic spikes.

For companies that rely on real-time data for decision-making, especially in sales and marketing, timely insights can significantly affect results. Websets meets these demands while also demonstrating a strong commitment to security and compliance, with SOC 2 certification and tailored support solutions.

By managing real-time data processing effectively, Websets empowers sales teams to respond swiftly to market changes. This agility enhances their competitive edge, allowing them to stay ahead in a fast-paced environment. Are you ready to elevate your business performance with Websets?

Evaluating AI Tools: Key Reliability Benchmarks for Performance Assessment

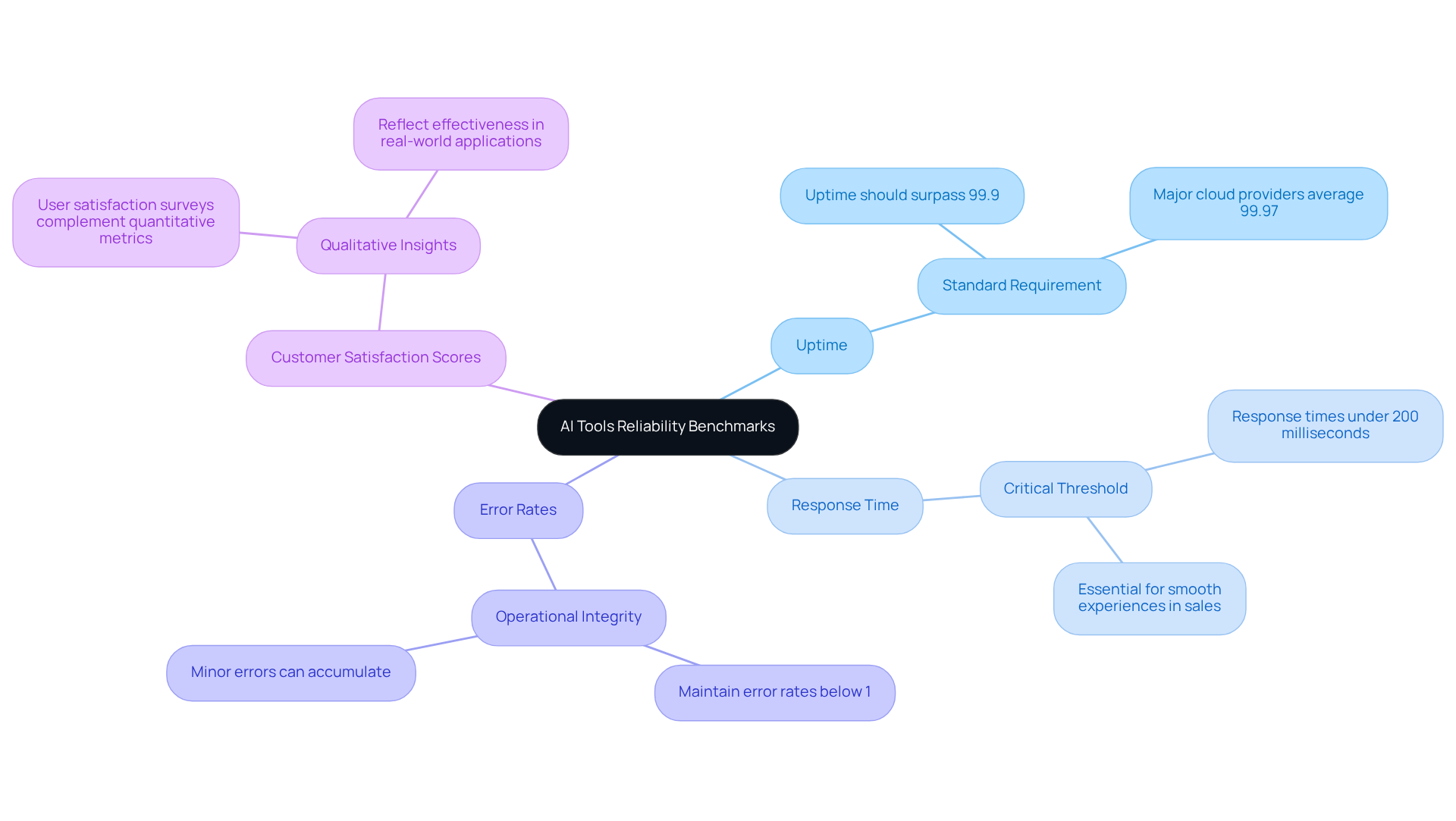

When evaluating AI tools, organizations should prioritize several API reliability benchmarks: uptime, response time, error rates, and customer satisfaction scores. Uptime should ideally exceed 99.9%, a standard that leading organizations pursue as a mark of service dependability. Major cloud providers, for instance, have achieved an average uptime of approximately 99.97%, which translates to only about 2.6 hours of downtime per year.

Response times are equally critical. They should stay under 200 milliseconds to guarantee smooth experiences, particularly in sales environments where speed is paramount. Error rates should stay below 1% to preserve operational integrity, because even minor errors accumulate and can significantly affect overall performance.
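The three quantitative thresholds above can be checked mechanically against monitoring data. The sketch below is illustrative only: the article does not say which percentile the 200 ms target refers to, so we assume the 95th-percentile latency (nearest-rank method), and the `Thresholds` and `evaluate` names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    # Targets quoted in this article; adjust them to your own service-level objectives.
    min_uptime_percent: float = 99.9
    max_p95_latency_ms: float = 200.0  # assumption: the 200 ms target applies at p95
    max_error_rate: float = 0.01       # 1%


def percentile(values, pct):
    """Nearest-rank percentile (pct between 0 and 100) of a non-empty sequence."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def evaluate(latencies_ms, total_requests, failed_requests, uptime_percent,
             thresholds=Thresholds()):
    """Return pass/fail for each benchmark given raw monitoring numbers."""
    if total_requests <= 0:
        raise ValueError("total_requests must be positive")
    p95 = percentile(latencies_ms, 95)
    error_rate = failed_requests / total_requests
    return {
        "uptime": uptime_percent >= thresholds.min_uptime_percent,
        "p95_latency": p95 <= thresholds.max_p95_latency_ms,
        "error_rate": error_rate <= thresholds.max_error_rate,
    }


if __name__ == "__main__":
    samples = [120] * 95 + [450] * 5  # hypothetical latency samples in ms
    print(evaluate(samples, total_requests=10_000, failed_requests=40, uptime_percent=99.95))
```

Using a percentile rather than an average keeps a handful of very slow requests from hiding behind many fast ones.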

User satisfaction surveys complement these quantitative metrics with qualitative insight into how well a tool performs in real-world use. By focusing on these reliability benchmarks, organizations can improve operational efficiency and ensure their AI tools meet the high standards needed to succeed in competitive markets. Are you ready to elevate your AI strategy?

Security and Compliance: Essential Benchmarks for API Reliability

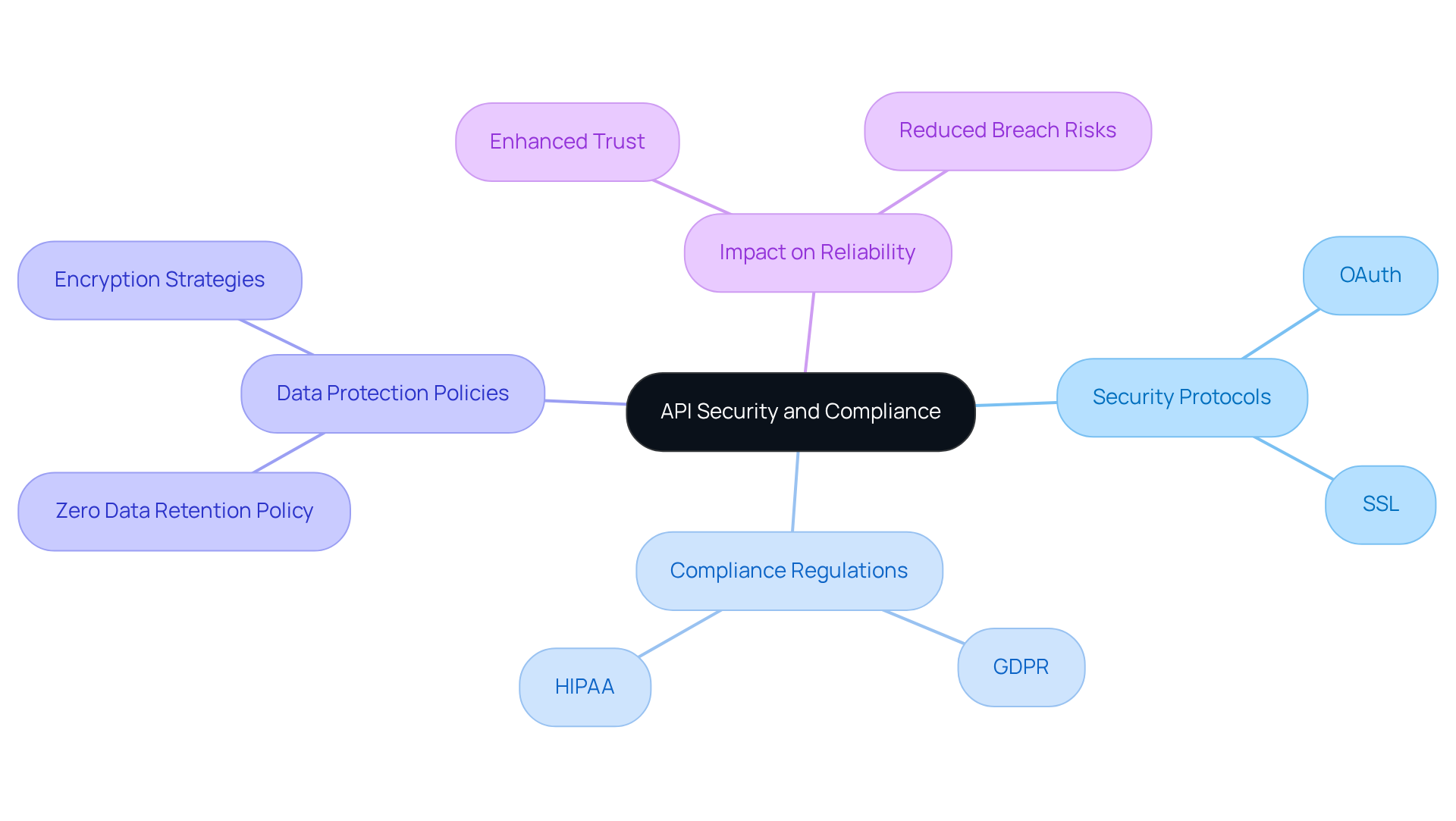

Security and compliance standards are essential to API reliability because they safeguard sensitive information. Websets' Zero Data Retention policy exemplifies this commitment by automatically purging queries and data according to each customer's requirements. This reinforces our dedication to data security and aligns with compliance mandates.

Implementing OAuth 2.0 for authorization and TLS (the successor to SSL) for encrypting data in transit is crucial. These protocols are robust defenses against unauthorized access and data breaches. Regular security audits identify vulnerabilities and keep APIs secure over time. Compliance with regulations such as GDPR and HIPAA is not merely a legal obligation; it is a vital part of protecting your organization's reputation, and non-compliance can lead to severe penalties and reputational damage.
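On the client side, "SSL" today means TLS, and OAuth 2.0 access tokens are usually sent as bearer tokens in the `Authorization` header. The sketch below uses only Python's standard library to show both pieces: a TLS context that verifies certificates and rejects protocol versions older than TLS 1.2, and a request that refuses to send a token over plain HTTP. How the token is obtained is out of scope, and the URL and token are hypothetical placeholders.

```python
import ssl
import urllib.request


def make_tls_context() -> ssl.SSLContext:
    """Client TLS context with certificate and hostname verification, TLS 1.2 or newer."""
    ctx = ssl.create_default_context()  # verifies certificates and hostnames by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def build_authorized_request(url: str, access_token: str) -> urllib.request.Request:
    """Attach an OAuth 2.0 bearer token (obtained elsewhere) to an HTTPS request."""
    if not url.startswith("https://"):
        raise ValueError("refusing to send credentials over a non-TLS URL")
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {access_token}"})


if __name__ == "__main__":
    # Hypothetical endpoint and token; sending it would be:
    # urllib.request.urlopen(req, context=make_tls_context())
    req = build_authorized_request("https://api.example.com/v1/search", "example-token")
    print(req.get_header("Authorization"))
```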

Organizations that have integrated OAuth and TLS into their API security frameworks report stronger data protection and greater user trust. By adhering to these standards, together with our customized support solutions and enterprise data processing agreements, businesses can strengthen their API reliability and ensure that sensitive information is handled securely.

Are you ready to elevate your API security? Embrace these standards today to protect your data and enhance your organizational credibility.

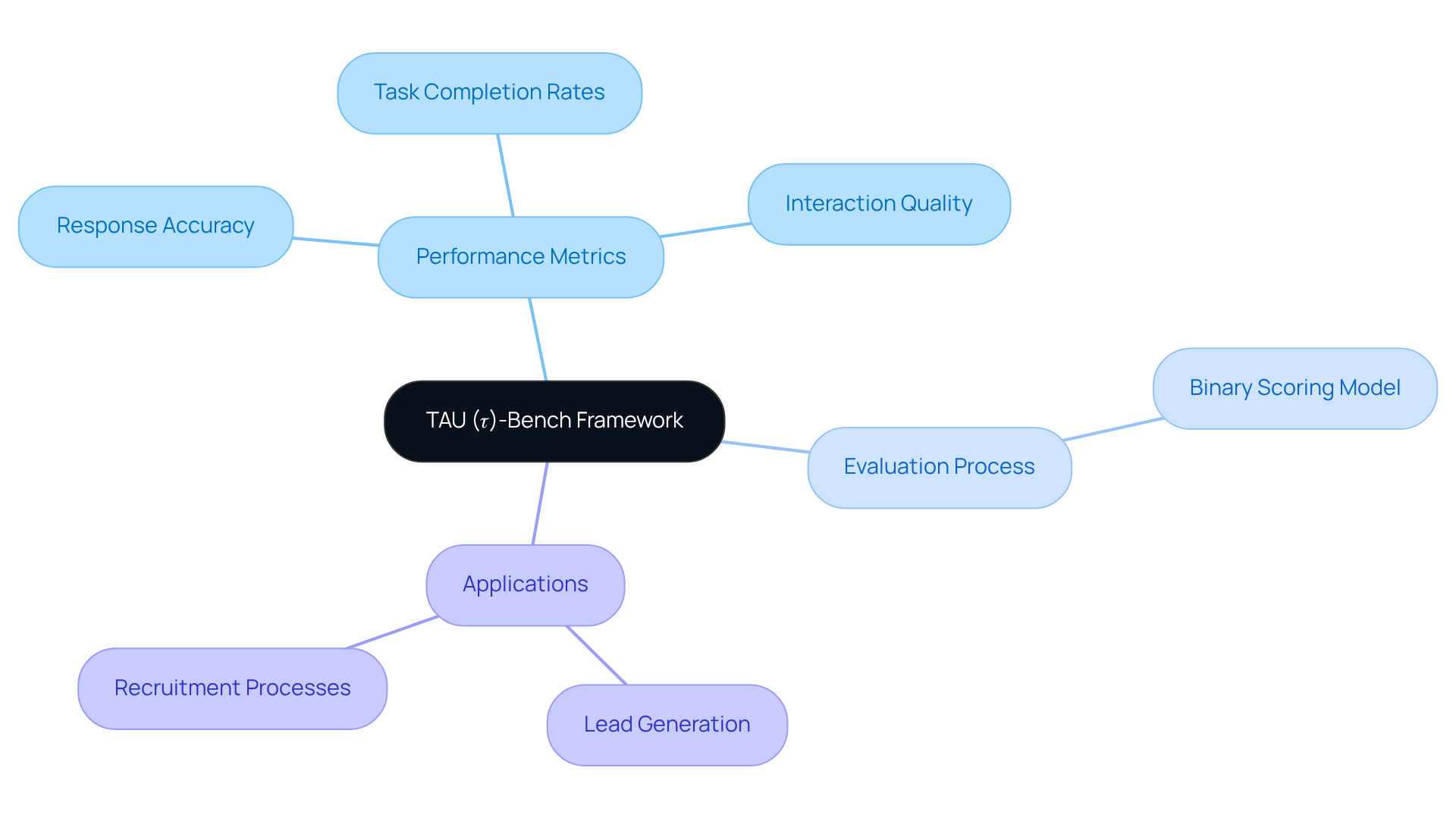

TAU (𝜏)-Bench: Benchmarking AI Agents for Reliability Insights

The TAU (𝜏)-Bench framework evaluates how reliably AI agents complete tasks that require conversing with users and calling API tools. Its published tasks come from customer-service domains such as retail and airline bookings, but the same approach can inform reliability assessments for lead generation and recruitment workflows. It assesses key performance metrics, including:

- Response accuracy

- Task completion rates

- Interaction quality

To eliminate ambiguity, each task is scored with a binary model: it either passes or fails, which keeps results easy to interpret.
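Binary outcomes become a reliability signal when the same task is attempted several times. τ-bench summarizes repeated runs with a pass^k metric: the probability that an agent succeeds on all k independent attempts at a task, averaged over tasks. The minimal sketch below computes it with the combinatorial estimator C(c, k) / C(n, k), assuming each task was run n ≥ k times with c successes; the function name is our own.

```python
from math import comb


def pass_hat_k(results_per_task, k):
    """Average probability that k independent trials of a task all succeed.

    results_per_task: one list of binary outcomes (1 = pass, 0 = fail) per task,
    each containing at least k trials.
    """
    if not results_per_task:
        raise ValueError("need at least one task")
    scores = []
    for outcomes in results_per_task:
        n, c = len(outcomes), sum(outcomes)
        if n < k:
            raise ValueError("each task needs at least k trials")
        # comb(c, k) is 0 when c < k, so a task with too few successes scores 0.
        scores.append(comb(c, k) / comb(n, k))
    return sum(scores) / len(scores)


if __name__ == "__main__":
    runs = [[1, 1, 1, 1], [1, 1, 0, 0]]  # hypothetical results: two tasks, four trials each
    for k in (1, 2, 4):
        print(k, round(pass_hat_k(runs, k), 3))
```

As k grows, the score falls for any agent that succeeds only some of the time, which separates consistent agents from lucky ones.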

By using TAU-bench, organizations can gain crucial insight into how effectively their AI agents operate across diverse scenarios, including those powered by Websets. This benchmarking approach identifies strengths and weaknesses and informs decisions about which tools to select and deploy.

Moreover, the TAU-bench framework is customizable, enabling organizations to tailor benchmarks to specific fields or use cases. For example, companies leveraging these metrics can refine their sales processes by ensuring that their AI agents meet defined performance standards. This ultimately leads to enhanced customer interactions and operational efficiency, especially when harnessing Websets' AI-powered sales intelligence to filter by hyper-specific criteria for precise lead generation.

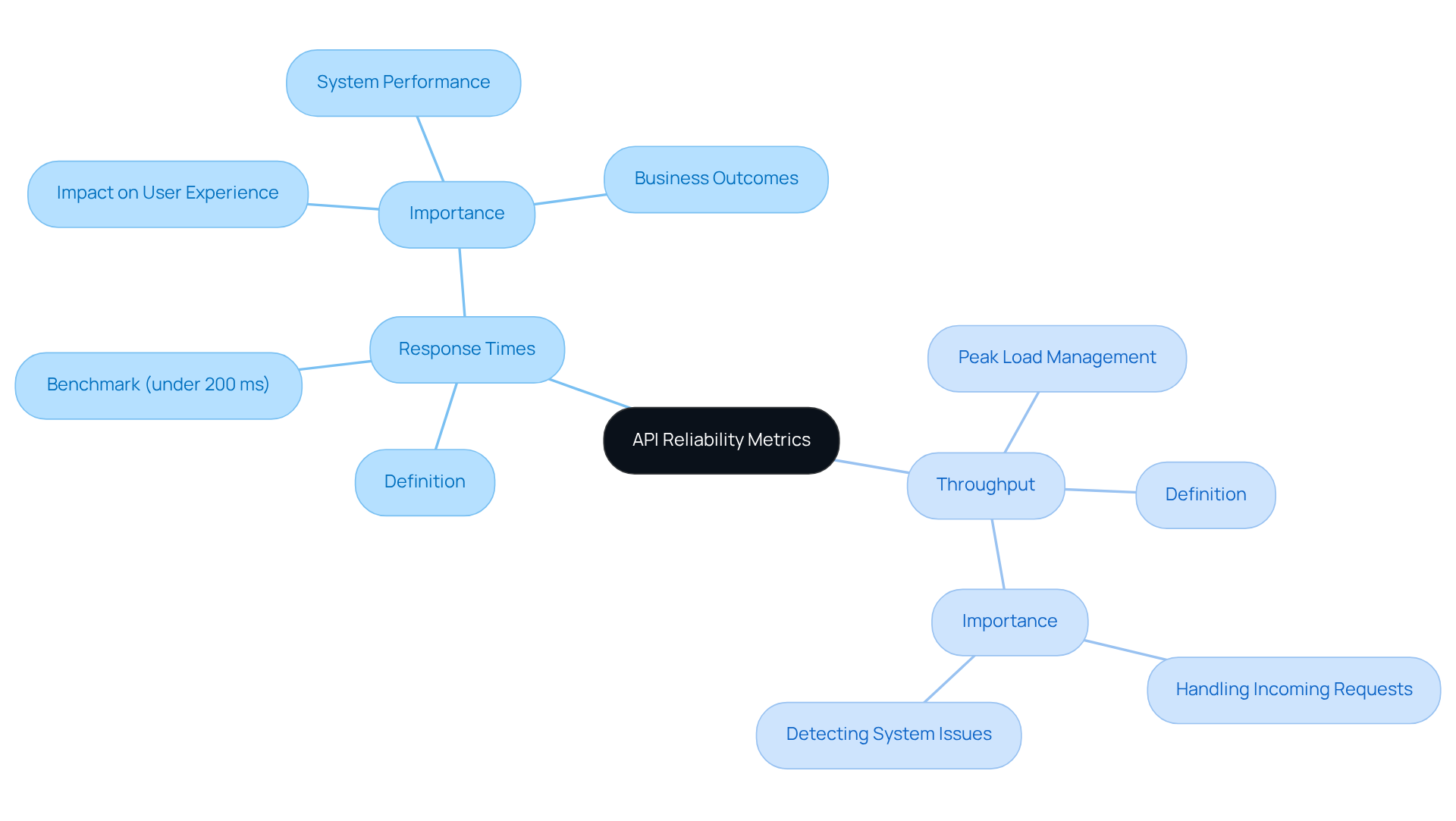

Response Times and Throughput: Core Metrics for API Reliability

Response times and throughput are critical metrics for assessing API reliability. Why? Because response time measures how quickly an API reacts to a request, while throughput reveals how many requests it processes within a given timeframe. For optimal performance, APIs should aim for response times under 200 milliseconds and enough throughput to handle peak loads without degradation.
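Response time and throughput are linked. Little's Law, a general queueing result we bring in here as an illustration, states that the average number of requests in flight equals throughput multiplied by average latency. The sketch below derives both metrics from hypothetical (start, end) request timestamps and then estimates the concurrency a service must sustain.

```python
from statistics import mean


def throughput_and_latency(requests):
    """requests: list of (start_s, end_s) timestamps for completed requests.

    Returns (throughput in requests/second over the observed window, mean latency in seconds).
    """
    if not requests:
        raise ValueError("need at least one request")
    window_start = min(start for start, _ in requests)
    window_end = max(end for _, end in requests)
    duration = window_end - window_start
    if duration <= 0:
        raise ValueError("observation window must have positive length")
    throughput = len(requests) / duration
    avg_latency = mean(end - start for start, end in requests)
    return throughput, avg_latency


def required_concurrency(throughput_rps, avg_latency_s):
    """Little's Law: average requests in flight = arrival rate x average time in system."""
    return throughput_rps * avg_latency_s


if __name__ == "__main__":
    # Hypothetical peak: 500 requests/second at the 200 ms response-time target.
    print(required_concurrency(500, 0.2))  # about 100 requests in flight
```

If latency creeps up at the same throughput, the required concurrency grows proportionally, which is often how degradation under peak load begins.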

Monitoring these metrics is not just a best practice; it is essential for organizations that support high-demand applications. By keeping a close eye on response times and throughput, businesses can keep their APIs reliable and efficient, improving user satisfaction and operational success.

Error Rates and System Stability: Indicators of API Reliability

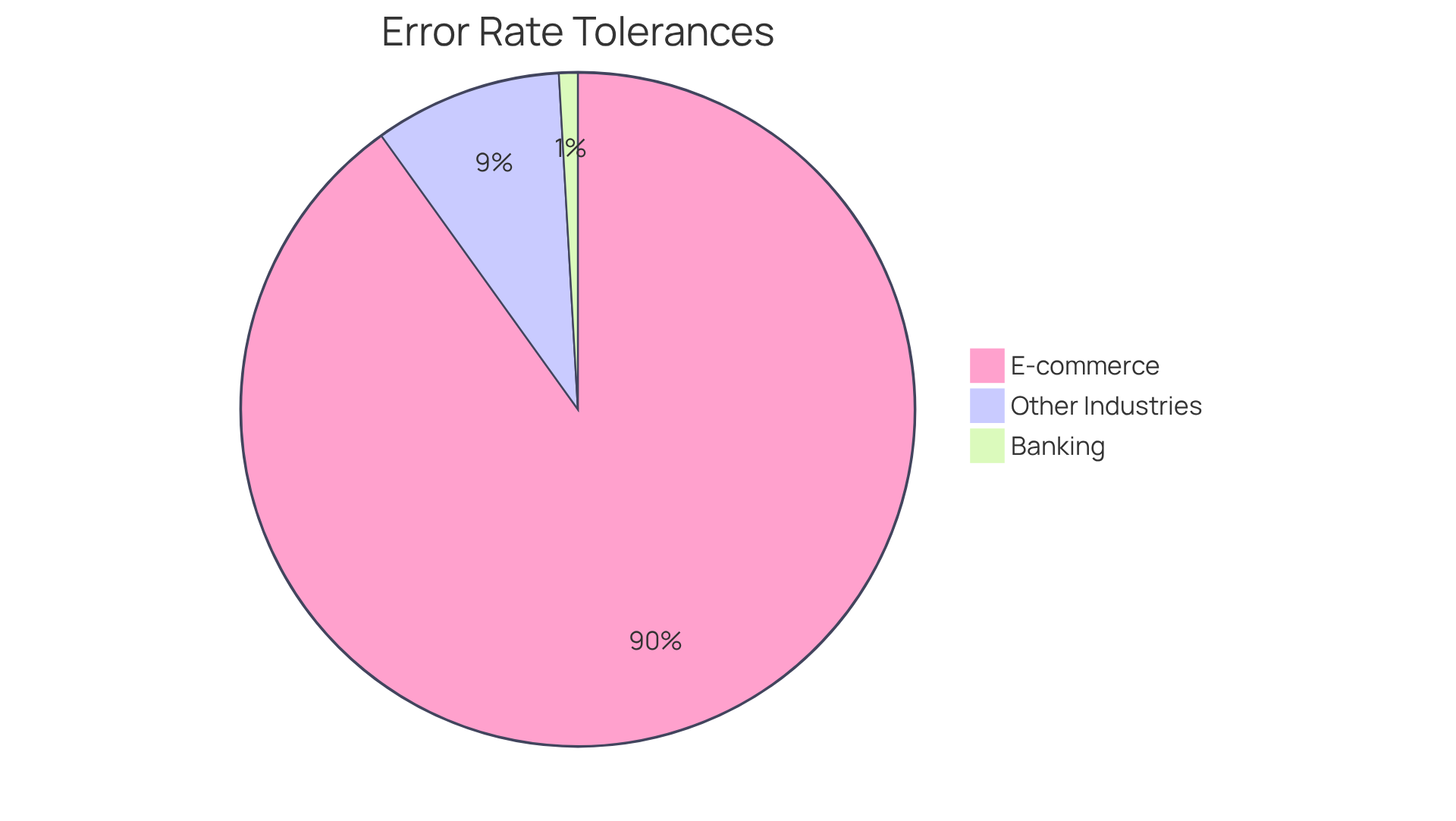

Error rates and system stability are vital indicators of API reliability. Organizations generally aim to keep error rates below 1% to ensure a good user experience. In banking, where error management is especially stringent, the target is just 0.1%, which fosters user trust and strengthens security. E-commerce applications, by contrast, may tolerate error rates of up to 10%, showing how standards vary across industries.

Key metrics for assessing system stability include uptime and the frequency of service interruptions. Regularly monitoring these indicators lets businesses identify and address potential issues before they escalate, preserving operational efficiency. Keeping error rates low depends on robust monitoring tools that track performance metrics in real time, so teams can respond swiftly to anomalies and keep the experience seamless for users.
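A simple form of real-time error tracking is a sliding window over the most recent responses that flags when the error rate crosses a target. The sketch below is a hypothetical `ErrorRateMonitor`; it assumes only HTTP 5xx responses count as errors (some teams also count timeouts or 429s), and the threshold can be set to 1% or to a stricter 0.1% like the banking target above.

```python
from collections import deque


class ErrorRateMonitor:
    """Tracks the error rate over the most recent `window` responses and flags breaches."""

    def __init__(self, window: int = 1000, threshold: float = 0.01):
        self.window = window
        self.threshold = threshold           # 0.01 = 1%; use 0.001 for a 0.1% target
        self.outcomes = deque(maxlen=window)  # True means the response was an error
        self.errors = 0

    def record(self, status_code: int) -> bool:
        """Record one response; return True if the windowed error rate exceeds the threshold."""
        is_error = status_code >= 500  # assumption: only server errors count
        if len(self.outcomes) == self.window:
            self.errors -= self.outcomes[0]  # the oldest outcome is about to be evicted
        self.outcomes.append(is_error)
        self.errors += is_error
        return self.error_rate() > self.threshold

    def error_rate(self) -> float:
        return self.errors / len(self.outcomes) if self.outcomes else 0.0


if __name__ == "__main__":
    monitor = ErrorRateMonitor(window=100, threshold=0.01)
    for code in [200] * 98 + [503, 500]:
        alert = monitor.record(code)
    print(monitor.error_rate(), alert)
```

In production the `True` return value would typically trigger a page or a proactive alert rather than a print.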

Organizations in the FinTech sector exemplify best practices by using comprehensive reliability benchmarks to evaluate performance. Their systems deliver high availability and rapid incident resolution, often resolving issues in under five minutes. Proactive alerting and geographic distribution of infrastructure are further strategies for achieving high availability.

By prioritizing these metrics and practices, companies can significantly enhance their API reliability benchmarks for AI tools. This commitment not only improves operational efficiency but also provides users with seamless digital experiences. Are you ready to elevate your API performance?

CPU and Memory Usage: Evaluating API Reliability Under Load

Effective monitoring of CPU and memory usage is essential for assessing API reliability, particularly during peak loads. High CPU utilization slows response times: as CPU usage rises, latency tends to rise with it, degrading the user experience. Excessive memory consumption can cause system crashes, further compromising service quality.
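Before reaching for a full monitoring stack, a quick way to see how much CPU and memory a request handler consumes is to profile it under a synthetic load. The sketch below uses Python's standard `time` and `tracemalloc` modules; `fake_handler` is a hypothetical stand-in for a real endpoint. Two limitations are worth stating: calls run sequentially rather than concurrently, and `tracemalloc` only sees memory allocated by Python code in this process.

```python
import time
import tracemalloc


def profile_under_load(handler, n_calls):
    """Call handler(i) n_calls times and report CPU time, wall time, and peak traced memory."""
    if n_calls <= 0:
        raise ValueError("n_calls must be positive")
    tracemalloc.start()
    try:
        cpu_start = time.process_time()   # CPU time consumed by this process
        wall_start = time.perf_counter()  # elapsed wall-clock time
        for i in range(n_calls):
            handler(i)
        cpu_s = time.process_time() - cpu_start
        wall_s = time.perf_counter() - wall_start
        _, peak_bytes = tracemalloc.get_traced_memory()  # returns (current, peak)
    finally:
        tracemalloc.stop()
    return {
        "cpu_s": cpu_s,
        "wall_s": wall_s,
        "cpu_ms_per_call": cpu_s / n_calls * 1000,
        "peak_bytes": peak_bytes,
    }


def fake_handler(i):
    """Hypothetical request handler: builds and serializes a small payload."""
    payload = {"id": i, "items": list(range(500))}
    return str(payload)


if __name__ == "__main__":
    print(profile_under_load(fake_handler, n_calls=1_000))
```

Comparing `cpu_ms_per_call` and `peak_bytes` across releases is a cheap way to catch a resource regression before it shows up as latency at peak traffic.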

Consider AWS's performance during Prime Day 2024, when the platform handled over 1.3 trillion invocations. Traffic at that scale underscores the need for robust resource-monitoring tools. Organizations that adopt systematic resource tracking report substantial reliability improvements, enabling them to manage high traffic without sacrificing service quality.

By optimizing resource allocation, businesses can improve API reliability and overall user satisfaction. This proactive approach mitigates performance bottlenecks and keeps APIs responsive and efficient even under demanding conditions. Notably, average API uptime is reported at 99.46%, which falls short of the 99.9% benchmark discussed earlier and amounts to roughly 47 hours of downtime per year, while API errors account for 67% of monitoring errors. Both figures highlight the critical need for effective monitoring strategies.

Are you ready to elevate your API performance? Implementing these strategies can make a significant difference in your service quality.


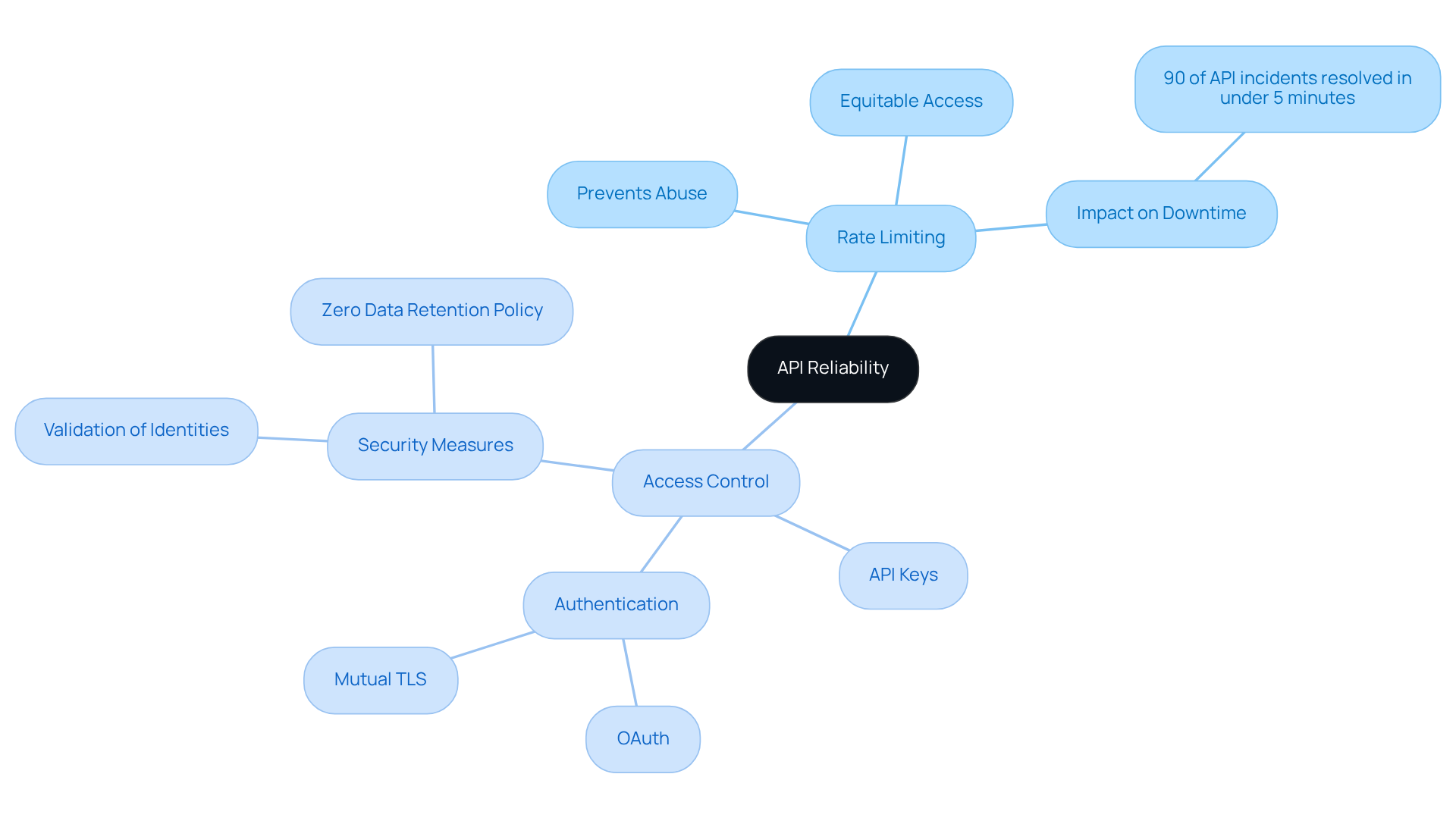

Rate Limiting and Access Control: Benchmarks for API Reliability

Rate limiting and access control are essential for ensuring API reliability. By capping the number of requests a client can make within a specified timeframe, rate limiting prevents abuse and ensures equitable access to resources. Organizations that implement rate limits significantly reduce the risk of downtime and improve overall service stability. FinTech illustrates the payoff of this kind of operational discipline: the sector resolves 90% of API incidents in under five minutes, a sign of high operational maturity.
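One widely used way to cap requests per timeframe is a token bucket: each client has a bucket that refills at a steady rate and allows short bursts up to its capacity. The sketch below is a generic illustration, not a description of how any particular API implements its limits; the clock is injectable so the behavior can be tested deterministically.

```python
import time


class TokenBucket:
    """Token-bucket rate limiter: bursts up to `capacity`, refills at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity  # start full so an idle client can burst immediately
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Return True if the request may proceed, consuming `cost` tokens."""
        now = self.clock()
        # Refill in proportion to elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # a server would typically answer HTTP 429 Too Many Requests here


if __name__ == "__main__":
    bucket = TokenBucket(rate=5, capacity=10)  # hypothetical limit: 5 req/s, bursts of 10
    print([bucket.allow() for _ in range(12)])
```

To enforce limits per client, a server would keep one bucket per API key (for example in a dictionary), so a single heavy user cannot exhaust capacity for everyone else.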

Access control mechanisms, such as API keys and authentication, safeguard sensitive data and prevent unauthorized access. Strict authentication protocols reduce the risk of API security incidents, which 99% of surveyed organizations reported experiencing in the past year. Robust methods such as OAuth and mutual TLS help ensure that only authorized clients can access specific data, minimizing the risk of data breaches.

Current access control practice for B2B APIs also stresses validating identities and permissions on every API call. This layered approach strengthens security and assures users that their data is protected. Websets' Zero Data Retention policy reinforces this commitment by automatically deleting queries and data according to each customer's requirements, which helps maintain compliance with industry standards alongside SOC 2 certification and Enterprise Data Processing Agreements. By prioritizing these benchmarks, organizations can significantly improve their API dependability and create a secure environment for all users.
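Validating identity and permissions on every call can be as simple as two checks before any handler runs. The sketch below assumes API keys with scopes; the key store, client name, and scope strings are hypothetical. Keys are stored as SHA-256 digests so the raw secrets never sit in the server's data store.

```python
import hashlib


def key_digest(raw_key: str) -> str:
    """Hash an API key so the server stores digests rather than raw secrets."""
    return hashlib.sha256(raw_key.encode("utf-8")).hexdigest()


# Hypothetical key store: digest of each issued key -> client id and granted scopes.
KEY_STORE = {
    key_digest("demo-key-123"): {"client": "acme-sales", "scopes": {"search:read"}},
}


def authorize(raw_key: str, required_scope: str) -> str:
    """Run on every call: return the client id, or raise PermissionError."""
    record = KEY_STORE.get(key_digest(raw_key))
    if record is None:
        raise PermissionError("unknown API key")          # identity check failed
    if required_scope not in record["scopes"]:
        raise PermissionError("key lacks required scope")  # permission check failed
    return record["client"]


if __name__ == "__main__":
    print(authorize("demo-key-123", "search:read"))
```

Keeping identity and permission as separate checks makes it easy to return distinct responses (typically 401 for an unknown key and 403 for a missing scope) and to log each failure type.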

Logging and Monitoring: Tools for Ensuring API Reliability

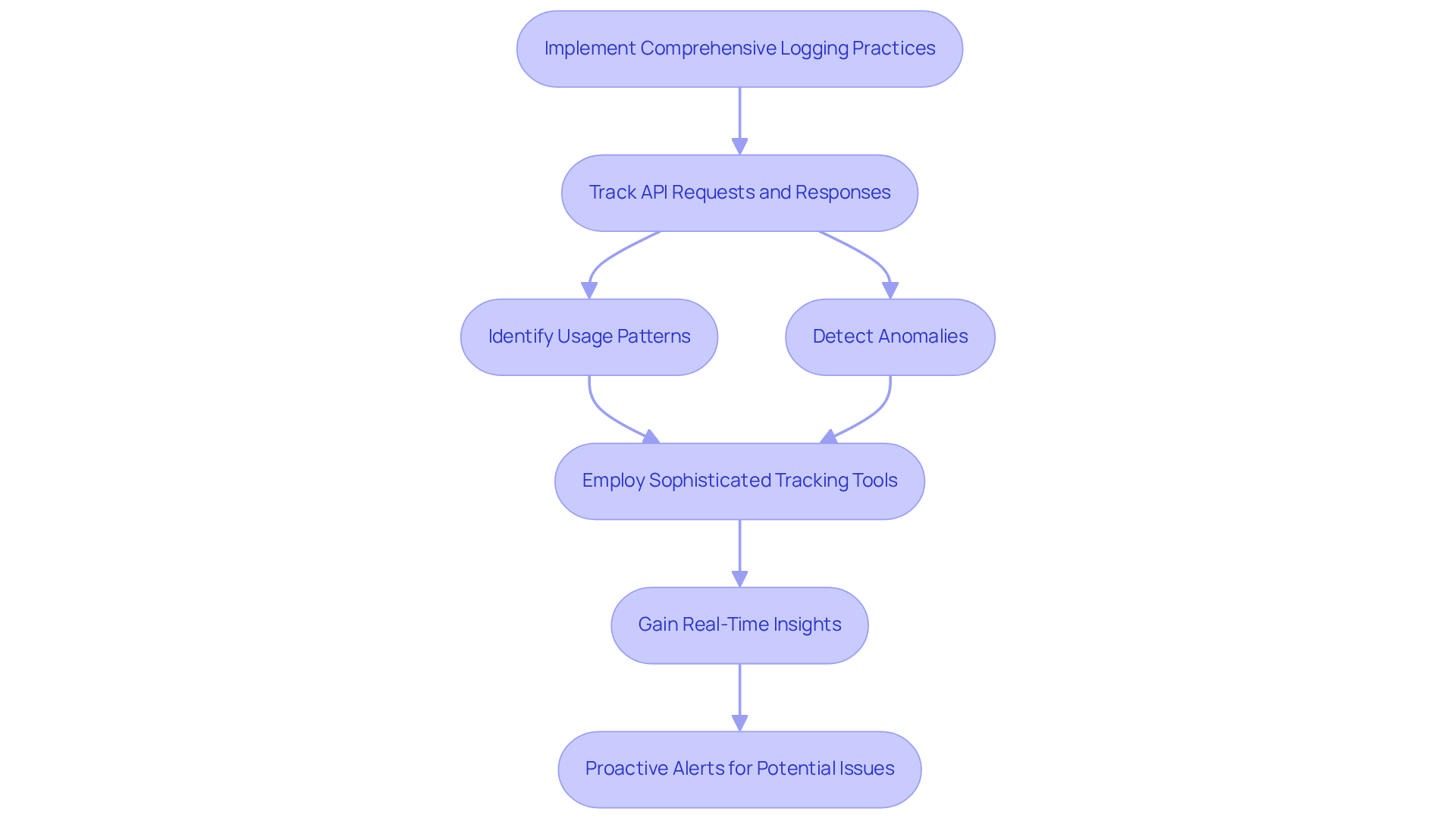

Logging and monitoring are not just important; they are essential for API stability and performance. Organizations should adopt comprehensive logging that records API requests and responses in detail, making it possible to identify usage patterns and detect anomalies. Did you know that 86% of developers expect their use of APIs to increase? That statistic underscores how heavily organizations are coming to rely on these systems.
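A practical starting point is structured logging: one machine-readable line per API call, with the fields needed to spot patterns and anomalies later. The sketch below uses Python's standard `logging` and `json` modules; the field names and the `/v1/search` path are hypothetical choices, not a prescribed schema.

```python
import json
import logging
import time

logger = logging.getLogger("api")


def log_request(method: str, path: str, status: int, started: float) -> dict:
    """Emit one JSON log line per API call; `started` is a time.perf_counter() value."""
    record = {
        "ts": time.time(),  # wall-clock timestamp for correlating with other systems
        "method": method,
        "path": path,
        "status": status,
        "latency_ms": round((time.perf_counter() - started) * 1000, 2),
    }
    logger.info(json.dumps(record))
    return record


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(message)s")
    start = time.perf_counter()
    # ... handle the request here ...
    log_request("GET", "/v1/search", 200, start)
```

Because each line is JSON, log pipelines can aggregate latency percentiles and error rates per endpoint without custom parsing.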

Businesses that use sophisticated monitoring tools gain real-time insight into API performance, which enables proactive alerts before issues escalate into major problems. These practices sustain high API dependability and improve client satisfaction. As API dependencies grow, efficient logging and monitoring become more important, and many organizations see them as vital for meeting rising user demands and delivering seamless digital experiences.

Consider a case study of real-time monitoring for medical device APIs: it shows how critical immediate visibility is, because performance issues must be addressed promptly to maintain reliability and safety. Wherever API performance significantly affects user experience, investing in robust logging and monitoring is imperative.

Conclusion

Implementing API reliability benchmarks is essential for boosting the performance and effectiveness of AI tools in sales. By concentrating on critical metrics such as uptime, response time, and error rates, organizations can ensure their APIs operate at peak efficiency, leading to better user experiences and higher-quality lead generation. Moreover, integrating advanced features, like those provided by Websets, lets businesses turn these benchmarks into a strategic advantage in a competitive landscape.

Throughout this article, we've highlighted various essential benchmarks, including:

- Performance under pressure

- Security compliance

- Effective monitoring practices

Each of these elements is vital for maintaining API reliability, which ultimately translates into improved operational efficiency and customer satisfaction. By adopting frameworks like TAU (𝜏)-Bench, organizations can systematically evaluate their AI tools, pinpointing strengths and areas for improvement while ensuring compliance with industry standards.

In conclusion, prioritizing API reliability is not just a technical necessity; it’s a strategic imperative for businesses aiming to excel in sales and lead generation. Organizations are urged to embrace these benchmarks and best practices, fostering a culture of continuous improvement and innovation. By doing so, they can enhance their API performance, secure sensitive data, and ultimately drive greater success in their sales efforts.

Frequently Asked Questions

What are the key API reliability metrics that Websets focuses on for lead generation?

Websets focuses on uptime, response time, and error rates as key API reliability metrics.

Why is uptime important for API reliability?

Uptime, the percentage of time the API is fully functional, is crucial because even a minor dip can lead to significant downtime, eroding trust and impacting revenue.

How does response time affect user satisfaction?

Response time measures how quickly the API processes requests; shorter response times correlate with greater satisfaction and engagement.

What do error rates indicate in the context of API reliability?

Error rates track the frequency of failed requests, which can severely diminish user experience and the quality of leads generated.

How does Websets ensure the reliability of its platform?

Websets implements continuous monitoring of uptime, response time, and error rates to guarantee a dependable and efficient platform that meets API reliability benchmarks for AI tools.

What additional features does Websets offer for lead generation?

Websets offers AI-powered sales intelligence search that allows users to pinpoint companies and individuals meeting hyper-specific criteria, enriching searches with detailed information like emails, company details, and positions.

How does Websets perform under high-load scenarios?

Websets maintains optimal performance even during high-load scenarios with flexible, high-capacity rate limits, ensuring efficient processing of API requests.

What is the significance of real-time data processing for businesses?

Real-time data processing is crucial for companies that depend on timely insights for decision-making, particularly in sales and marketing, as it can significantly impact results.

What security and compliance standards does Websets adhere to?

Websets demonstrates a strong commitment to security and compliance, with SOC 2 certification and tailored support solutions.

What benchmarks should organizations prioritize when evaluating AI tools?

Organizations should prioritize uptime (ideally surpassing 99.9%), response time (under 200 milliseconds), error rates (below 1%), and customer satisfaction scores when evaluating AI tools.