Introduction

Understanding the reliability of datasets is crucial. In a landscape where data-driven decisions shape business strategies, organizations must prioritize accuracy, completeness, and consistency. But with the vast amounts of data available, how can they ensure their insights are based on trustworthy information?

This guide offers a structured approach to evaluating the reliability of lead datasets. It covers evaluation metrics, testing methods, monitoring practices, and supporting tools that together help you achieve high data integrity and ground your decisions in reliable data.

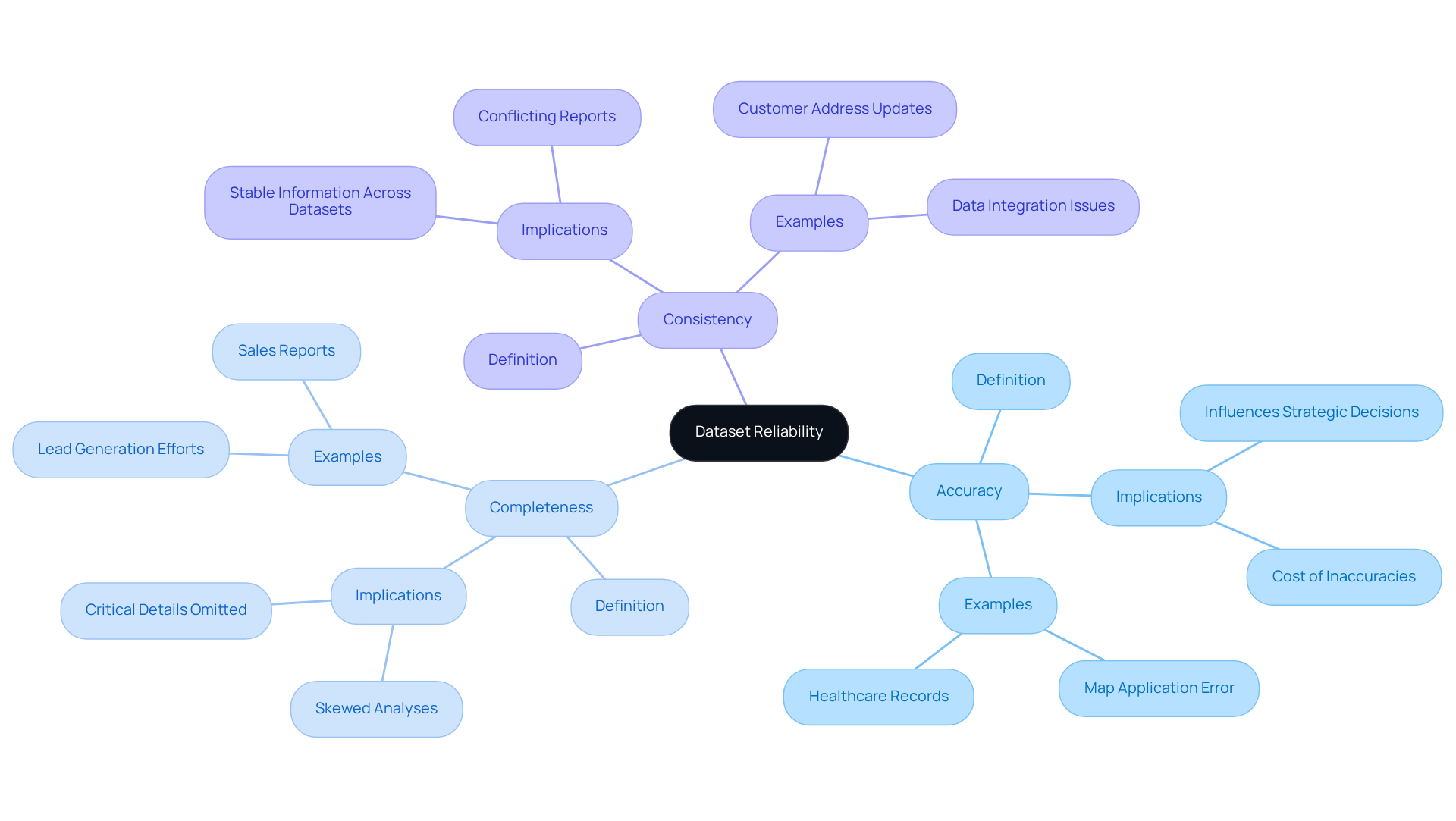

Define Dataset Reliability

The dependability of a dataset hinges on how much we can trust the information to be precise, complete, and consistent over time. This reliability is assessed through several key dimensions:

- Accuracy: This dimension ensures that the data correctly reflects the real-world entities or events it is meant to represent. In market research, for example, precise information can significantly influence strategic decisions. Inaccuracies can lead to misguided conclusions, potentially costing businesses valuable opportunities.

- Completeness: Completeness refers to the presence of all necessary data points, ensuring that no critical details are omitted. Incomplete datasets can skew analyses and create gaps in insights. This is particularly detrimental in lead generation, where every detail counts and can make or break a campaign.

- Consistency: Consistency means that information agrees across datasets and remains stable over time, yielding similar results under the same conditions. For instance, if a customer's address is updated in one system but not in another, the two systems will produce conflicting reports that undermine confidence in the data.
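To make the consistency dimension concrete, here is a minimal Python sketch that compares the same customer records exported from two systems and reports every field whose values disagree. The system names (`crm`, `billing`), the record layout, and the `find_conflicts` helper are hypothetical, chosen only for illustration, and the sketch assumes both exports share a common customer ID.

```python
# Hypothetical exports from two systems, keyed by a shared customer ID.
crm = {
    "C001": {"name": "Acme Ltd", "address": "12 Main St"},
    "C002": {"name": "Globex Inc", "address": "4 Park Ave"},
}
billing = {
    "C001": {"name": "Acme Ltd", "address": "98 Elm Rd"},  # address updated only here
    "C002": {"name": "Globex Inc", "address": "4 Park Ave"},
}


def find_conflicts(a, b, fields):
    """Return (record_id, field, value_in_a, value_in_b) for every mismatch
    among records that exist in both systems."""
    conflicts = []
    for record_id in sorted(a.keys() & b.keys()):
        for field in fields:
            va, vb = a[record_id].get(field), b[record_id].get(field)
            if va != vb:
                conflicts.append((record_id, field, va, vb))
    return conflicts


print(find_conflicts(crm, billing, ["name", "address"]))
# [('C001', 'address', '12 Main St', '98 Elm Rd')]
```

In practice you would usually normalize values (case, whitespace, address formatting) before comparing, so that purely cosmetic differences are not reported as conflicts.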

Understanding these dimensions is vital for assessing the quality of any dataset, particularly when evaluating lead datasets and market research data. Dependable information is essential for informed decision-making. As industry specialists emphasize, upholding high standards of accuracy and completeness not only enhances data trustworthiness but also boosts overall operational efficiency. Are you ready to elevate your data quality standards?

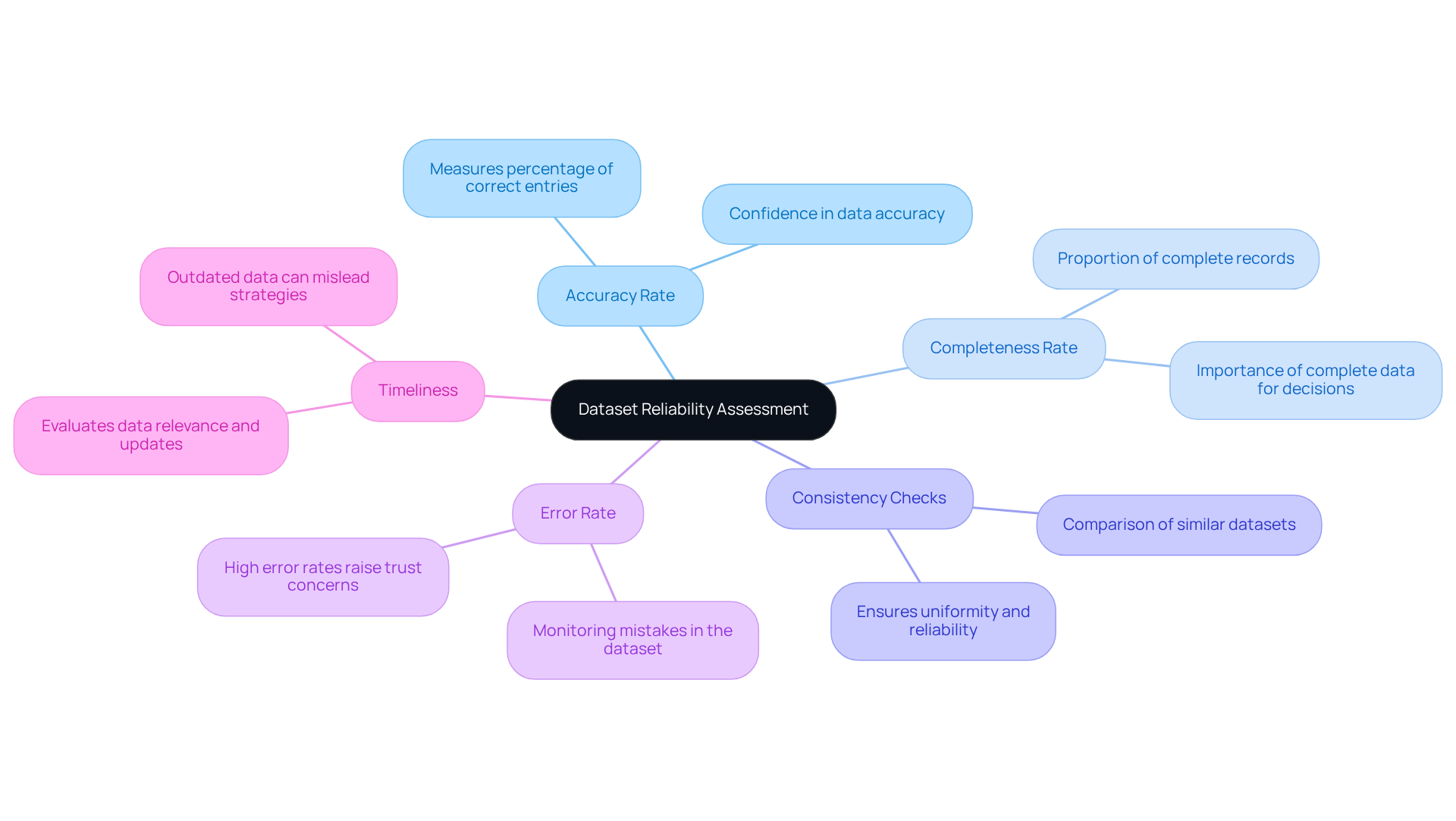

Assess Evaluation Criteria and Metrics

To effectively assess dataset reliability, it’s crucial to consider key evaluation criteria and metrics that can guide your analysis:

- Accuracy Rate: This metric measures the percentage of correct entries in your dataset compared to a verified source. Are you confident in the accuracy of your data?

- Completeness Rate: Calculate the proportion of complete records against the total number of records. Incomplete data can lead to misguided decisions.

- Consistency Checks: Compare datasets collected under similar conditions to ensure they yield the same results. Consistency is a hallmark of reliable data.

- Error Rate: Track how often mistakes occur within the dataset. A high error rate is a clear signal that the data may not be trustworthy.

- Timeliness: Evaluate whether the data is up-to-date and relevant for your current decision-making needs. Outdated data can mislead your strategies.
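The metrics above can be computed with a few lines of Python. This sketch assumes a small, hypothetical list of lead records, a hypothetical verified reference for the `email` field, and a 365-day freshness window for timeliness. It defines the error rate as the share of checked values that disagree with the reference; the function names are illustrative, not taken from any particular library.

```python
from datetime import date

# Hypothetical lead records; None marks a missing value.
leads = [
    {"id": 1, "email": "a@example.com", "phone": "555-0100", "updated": date(2024, 5, 1)},
    {"id": 2, "email": "b@example.com", "phone": None, "updated": date(2023, 1, 15)},
    {"id": 3, "email": "c@example.com", "phone": "555-0102", "updated": date(2024, 4, 20)},
    {"id": 4, "email": None, "phone": "555-0103", "updated": date(2022, 11, 3)},
]
# Hypothetical verified source for the email field, keyed by record ID.
verified_emails = {1: "a@example.com", 2: "b@example.com", 3: "c@corp.example", 4: "d@example.com"}
REQUIRED = ("email", "phone")


def completeness_rate(records, required):
    """Share of records in which every required field is present."""
    complete = sum(all(r.get(f) is not None for f in required) for r in records)
    return complete / len(records)


def accuracy_rate(records, field, reference):
    """Share of non-missing values that match the verified reference.
    Missing values are left to the completeness metric."""
    checked = [r for r in records if r.get(field) is not None and r["id"] in reference]
    correct = sum(r[field] == reference[r["id"]] for r in checked)
    return correct / len(checked) if checked else float("nan")


def timeliness_rate(records, as_of, max_age_days):
    """Share of records updated within max_age_days of the as_of date."""
    fresh = sum((as_of - r["updated"]).days <= max_age_days for r in records)
    return fresh / len(records)


acc = accuracy_rate(leads, "email", verified_emails)
print(
    f"completeness={completeness_rate(leads, REQUIRED):.2f} "
    f"accuracy={acc:.2f} error={1 - acc:.2f} "
    f"timeliness={timeliness_rate(leads, date(2024, 6, 1), 365):.2f}"
)
# completeness=0.50 accuracy=0.67 error=0.33 timeliness=0.50
```

Keeping missing values out of the accuracy calculation avoids counting the same problem twice: a blank email lowers completeness, while a wrong email lowers accuracy.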

By applying these metrics, you can evaluate the reliability of your lead datasets and establish a robust framework for assessing their dependability. This proactive approach not only strengthens data integrity but also supports informed decision-making.

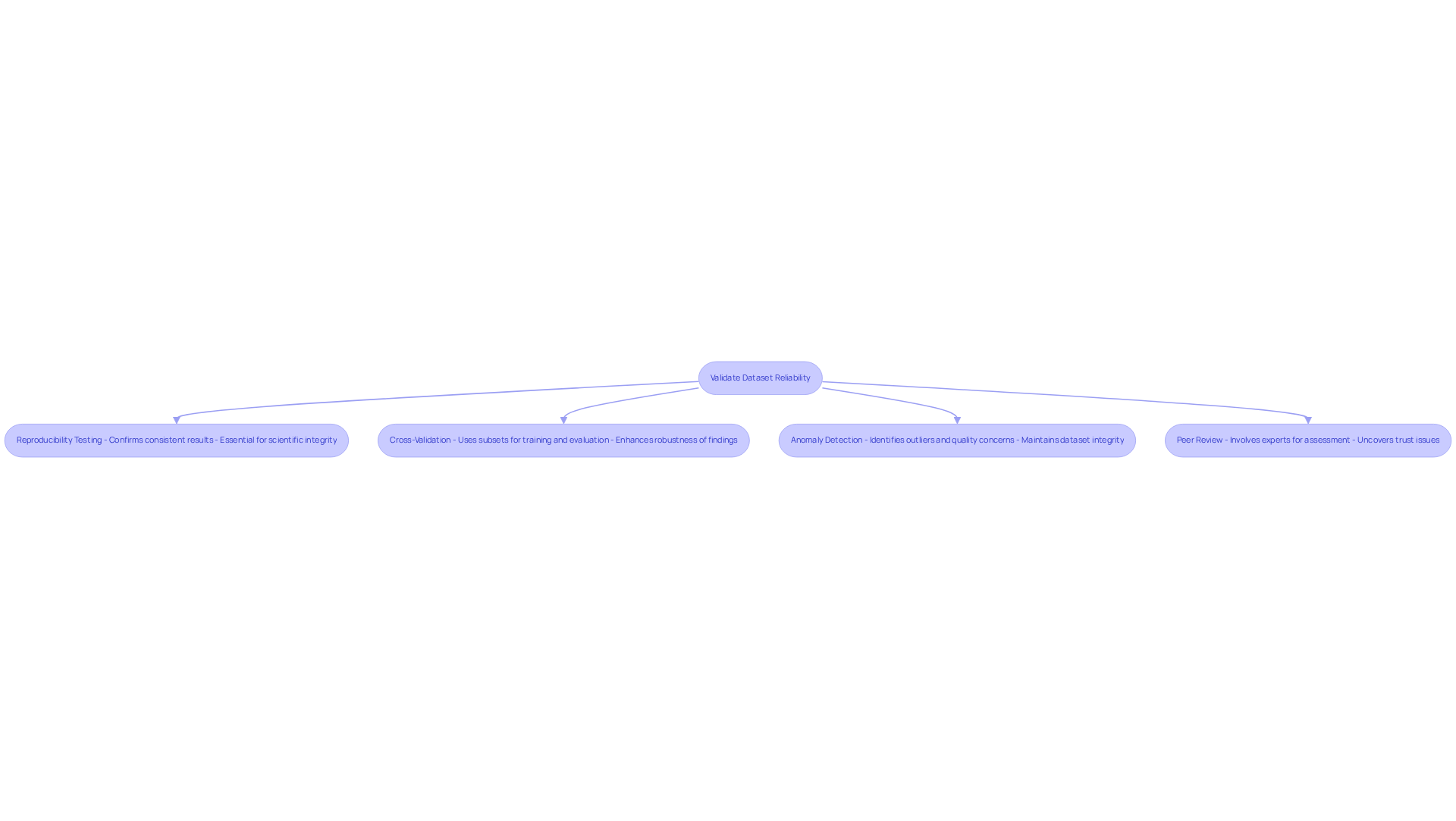

Validate Dataset Reliability Through Testing

To validate the reliability of your dataset, consider implementing these essential testing methods:

- Reproducibility Testing: Rerun the same analyses on the same dataset and confirm that they produce consistent results. This is crucial for building trust in your information, as reproducibility is a cornerstone of scientific integrity. Recent findings suggest that reproducibility rates across various experiments can range from only 15% to 45%, a stark statistic that underscores the need for rigorous testing when evaluating dataset reliability.

- Cross-Validation: Use different subsets of your data to train and evaluate models. This method checks whether results remain consistent across samples, which strengthens the robustness of your findings. A widely used variant is k-fold cross-validation, in which the data is divided into k subsets (folds) and each fold is held out once for evaluation while the model is trained on the remaining k-1 folds. As Jay Pujara, Director of the Center on Knowledge Graphs, states, "People will not believe in science if we can’t demonstrate that scientific research is reproducible."

- Anomaly Detection: Employ algorithms designed to identify outliers or unexpected values that may signal quality concerns. This proactive approach helps maintain the integrity of your dataset by flagging potential inaccuracies before they can affect decision-making.

- Peer Review: Involve colleagues or industry experts in reviewing your data and methods. Their insights can uncover reliability issues that may not be immediately apparent, fostering a collaborative approach to data validation. A case study on assessing reproducibility in research illustrates how peer review can enhance the credibility of findings, helping ensure that data-driven decisions rest on reliable information.
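Cross-validation and a basic reproducibility check can be combined in one small experiment. The Python sketch below splits a hypothetical list of deal sizes into k folds using a fixed random seed, scores a deliberately simple baseline (predicting the training-fold mean) on each held-out fold, and then reruns the whole procedure to confirm the scores are identical. The baseline model, the data, and the helper names are assumptions for illustration; in real work you would substitute your own model and metric.

```python
import random
import statistics


def k_fold_indices(n, k, seed):
    """Shuffle indices 0..n-1 with a fixed seed and split them into k folds
    whose sizes differ by at most one."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def cross_validate(values, k=5, seed=42):
    """Hold out each fold once, 'train' a baseline that predicts the mean of
    the remaining values, and return the mean absolute error per fold."""
    scores = []
    for fold in k_fold_indices(len(values), k, seed):
        held_out = set(fold)
        train = [v for i, v in enumerate(values) if i not in held_out]
        test = [values[i] for i in fold]
        prediction = statistics.mean(train)
        scores.append(statistics.mean(abs(v - prediction) for v in test))
    return scores


# Hypothetical deal sizes taken from a lead dataset.
deal_sizes = [120, 135, 128, 140, 132, 125, 138, 131, 129, 136]

run1 = cross_validate(deal_sizes)
run2 = cross_validate(deal_sizes)
print("per-fold MAE:", [round(s, 1) for s in run1])
print("spread across folds:", round(max(run1) - min(run1), 1))

# Reproducibility check: same data and same seed must give identical scores.
assert run1 == run2
```

A large spread between fold scores suggests that results depend heavily on which records happened to be sampled, which is itself a reliability warning. Fixing the seed is what makes the rerun comparable; without it, the folds and therefore the scores would change on every run.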

By employing these testing techniques, you can verify the trustworthiness of your lead datasets. This ultimately boosts your confidence in the data-driven choices that shape your business strategies.
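For anomaly detection, one simple and widely used rule flags values outside Tukey's fences, that is, more than 1.5 interquartile ranges (IQR) below the first quartile or above the third. The sketch below uses only Python's standard `statistics` module (`statistics.quantiles` requires Python 3.8 or later); the `employee_counts` field and its values are hypothetical, with one implausible entry of the kind a data-entry error might produce.

```python
import statistics


def iqr_outliers(values, factor=1.5):
    """Return values lying outside [Q1 - factor*IQR, Q3 + factor*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - factor * iqr, q3 + factor * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical employee counts from a lead dataset; 9500 looks like a typo.
employee_counts = [12, 15, 14, 10, 13, 11, 16, 14, 12, 9500]
print(iqr_outliers(employee_counts))  # [9500]
```

The IQR rule is used here because quartiles are barely affected by a single extreme value. A z-score rule with a cutoff of 3 would miss this outlier: with 10 values and the sample standard deviation, no point can have a z-score above (n-1)/sqrt(n), about 2.85, because the outlier inflates the standard deviation it is measured against.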

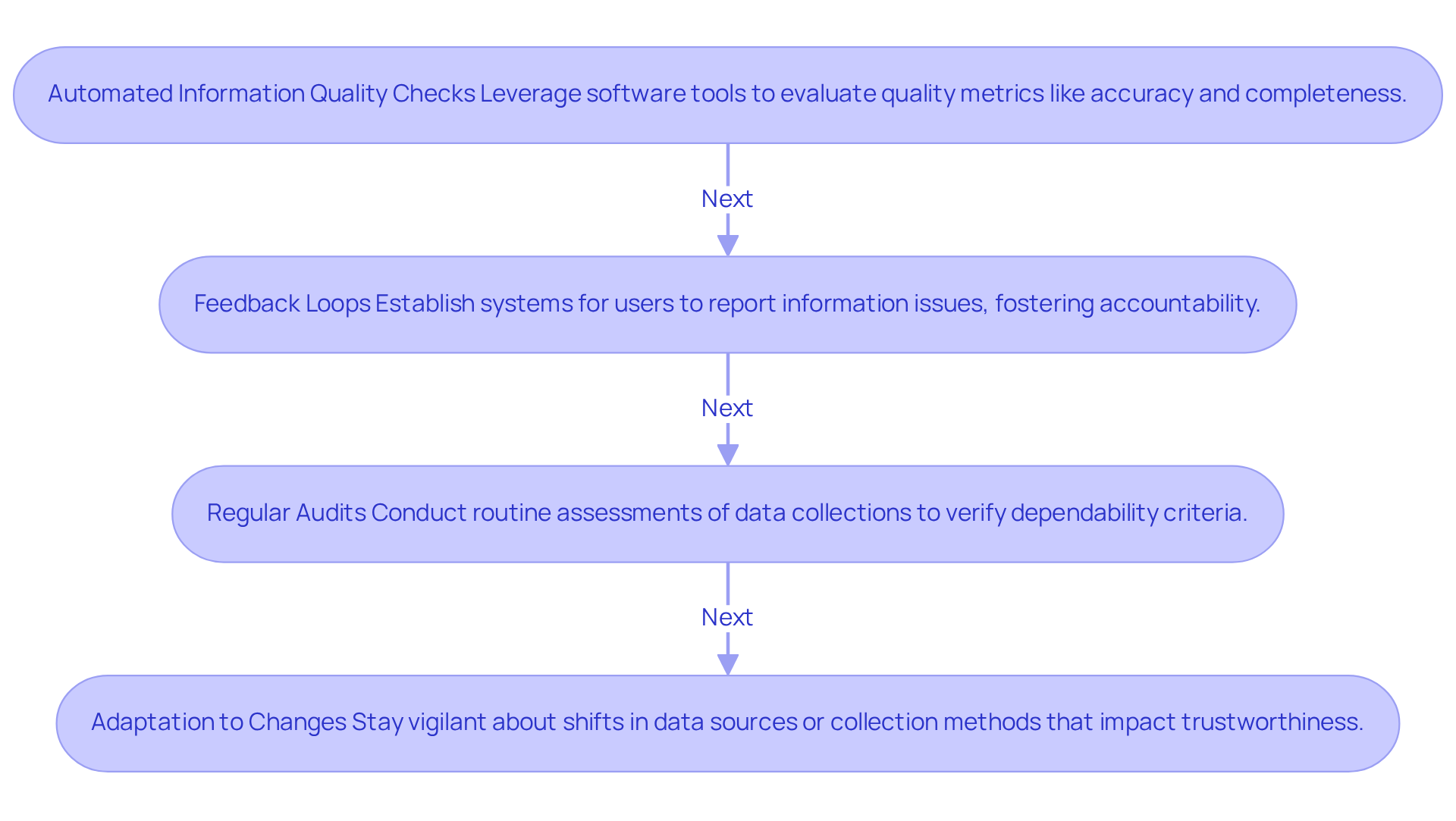

Implement Continuous Monitoring and Adjustments

To ensure dataset reliability, consider implementing these continuous monitoring strategies:

- Automated Data Quality Checks: Use software tools to evaluate quality metrics such as accuracy and completeness on a regular schedule. This proactive approach identifies issues early and reinforces data integrity.

- Feedback Loops: Establish channels that let users report data issues. This enables swift identification and resolution of reliability problems and fosters a culture of accountability.

- Regular Audits: Conduct routine assessments of your datasets to verify that they meet established reliability criteria. Adjust procedures as needed to keep pace with evolving standards and practices.

- Adaptation to Changes: Stay alert to shifts in data sources or collection methods that could affect reliability, and adjust your evaluation criteria accordingly to maintain high standards.
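Automated quality checks usually come down to computing metrics on each new batch and raising an alert when one falls below a threshold. The following Python sketch shows that pattern. The `QualityCheck` class, the check names, the thresholds, and the sample batch are hypothetical, and the email test (`"@" in e`) is deliberately crude, standing in for whatever validation rule you actually use.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class QualityCheck:
    name: str
    metric: Callable[[list], float]  # computes a score in [0, 1] for a batch
    minimum: float                   # alert when the score falls below this


def non_missing_share(field):
    """Build a metric: share of records where `field` is present."""
    def metric(records):
        return sum(r.get(field) is not None for r in records) / len(records)
    return metric


def valid_email_share(records):
    """Share of non-empty emails containing '@' (a placeholder rule)."""
    emails = [r.get("email") for r in records if r.get("email")]
    return sum("@" in e for e in emails) / len(emails) if emails else 0.0


def run_checks(records, checks):
    """Evaluate each check and return (name, score, minimum) for failures."""
    failures = []
    for check in checks:
        value = check.metric(records)
        if value < check.minimum:
            failures.append((check.name, round(value, 2), check.minimum))
    return failures


CHECKS = [
    QualityCheck("email present", non_missing_share("email"), 0.95),
    QualityCheck("phone present", non_missing_share("phone"), 0.80),
    QualityCheck("email well-formed", valid_email_share, 0.98),
]

batch = [
    {"email": "a@example.com", "phone": "555-0100"},
    {"email": "b@example.com", "phone": None},
    {"email": "not-an-email", "phone": "555-0102"},
    {"email": "d@example.com", "phone": "555-0103"},
]
for name, value, minimum in run_checks(batch, CHECKS):
    print(f"ALERT {name}: {value} < {minimum}")
# ALERT phone present: 0.75 < 0.8
# ALERT email well-formed: 0.75 < 0.98
```

In a production pipeline the `print` would typically be replaced by a call to your alerting or ticketing system, and the thresholds tuned to what your downstream decisions can tolerate.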

By adopting these practices, you can keep your datasets reliable over time, ultimately supporting effective decision-making. Are you ready to enhance your data reliability?
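To stay alert to changes in sources or collection methods, you can compare a simple profile of each new batch against a trusted baseline. This Python sketch profiles the share of missing values per field, then reports fields that were added or removed and fields whose missing-value rate moved by more than a tolerance. The 10-percentage-point tolerance, the field names, and both sample batches are assumptions for illustration.

```python
def profile(records):
    """Share of missing (None or absent) values per field across a batch."""
    fields = set().union(*(r.keys() for r in records))
    return {f: sum(r.get(f) is None for r in records) / len(records) for f in sorted(fields)}


def detect_drift(baseline, current, tolerance=0.10):
    """Compare missing-value profiles and report schema and rate changes."""
    base, cur = profile(baseline), profile(current)
    report = {
        "added_fields": sorted(cur.keys() - base.keys()),
        "removed_fields": sorted(base.keys() - cur.keys()),
        "shifted_fields": {},
    }
    for f in sorted(base.keys() & cur.keys()):
        change = cur[f] - base[f]
        if abs(change) > tolerance:
            report["shifted_fields"][f] = round(change, 2)
    return report


# Hypothetical batches: the current one comes from a new lead source.
baseline = [
    {"email": "a@example.com", "phone": "1", "title": "CTO"},
    {"email": "b@example.com", "phone": "2", "title": None},
    {"email": "c@example.com", "phone": None, "title": "VP"},
    {"email": "d@example.com", "phone": "4", "title": "CEO"},
]
current = [
    {"email": "e@example.com", "phone": None, "linkedin": "in/e"},
    {"email": "f@example.com", "phone": None, "linkedin": None},
    {"email": "g@example.com", "phone": "7", "linkedin": "in/g"},
    {"email": None, "phone": None, "linkedin": "in/h"},
]
print(detect_drift(baseline, current))
# {'added_fields': ['linkedin'], 'removed_fields': ['title'], 'shifted_fields': {'email': 0.25, 'phone': 0.5}}
```

Missing-value rates are only one possible profile; the same comparison works for value ranges, category frequencies, or format-validity rates, depending on which shifts matter most for your datasets.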

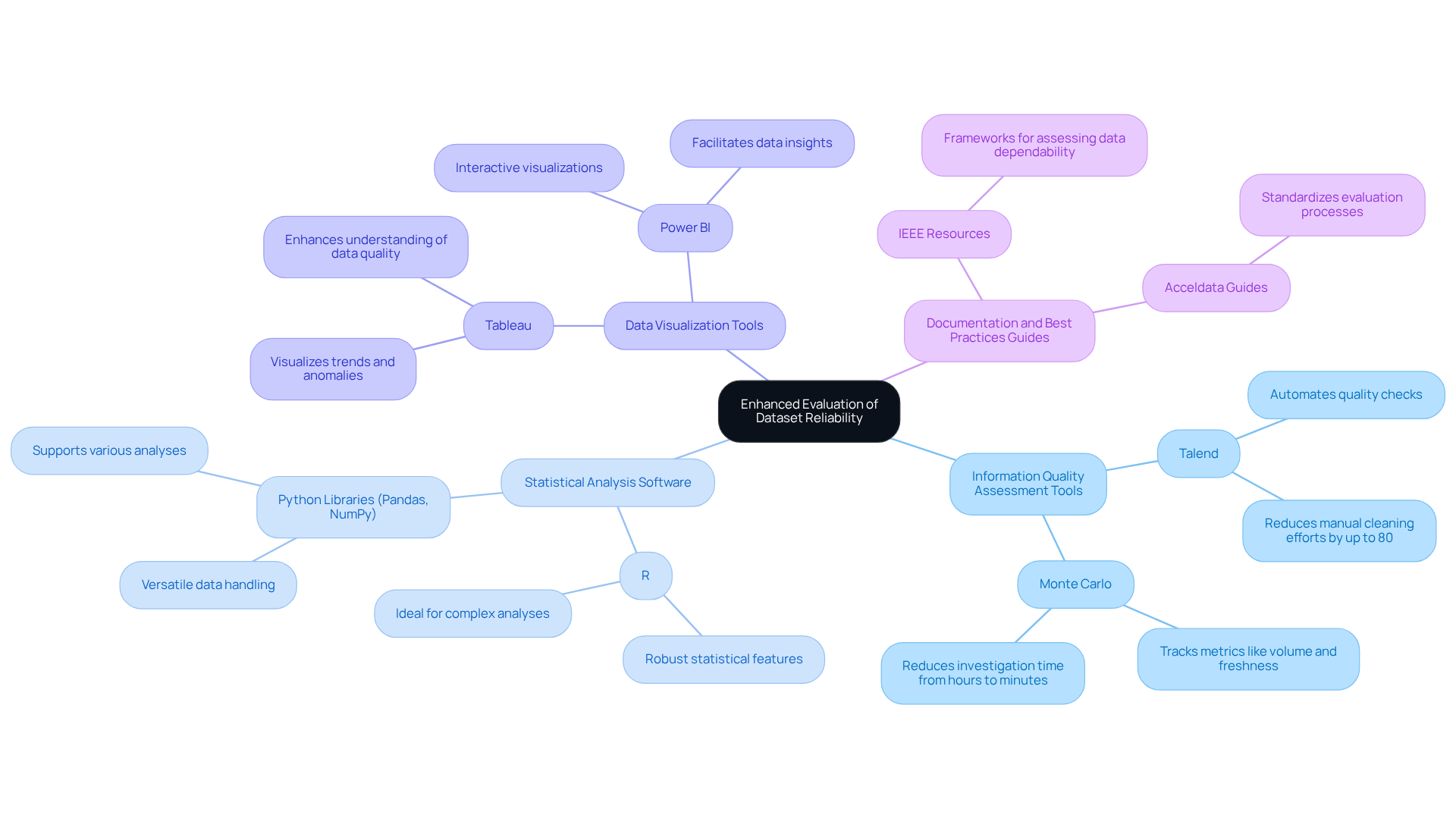

Utilize Tools and Resources for Enhanced Evaluation

To enhance your evaluation of dataset reliability, consider leveraging essential tools and resources:

- Data Quality Assessment Tools: Platforms such as Talend and Monte Carlo help monitor quality metrics and automate checks. Such tools can reduce manual data-cleaning effort by up to 80%, helping keep your datasets precise and dependable. Aim for accuracy above 98% in your quality measurements to uphold high standards.

- Statistical Analysis Software: Use R or Python libraries (e.g., pandas, NumPy) to run the analyses that validate the reliability of your data. As Will Harris emphasizes, it is best to start with targeted solutions for your most valuable data. R is favored for its robust statistical features, while Python offers versatility in data handling and analysis.

- Data Visualization Tools: Use visualization software such as Tableau or Power BI to spot trends and anomalies in your datasets. Effective visualization can reveal issues that are easy to miss in raw data, improving your understanding of data quality.

- Documentation and Best Practices Guides: Consult resources from organizations such as IEEE or Acceldata for best practices in assessing data reliability. These guides offer frameworks and methodologies that can help standardize your evaluation processes.

By utilizing these tools, you can streamline your evaluation processes and ensure your lead datasets meet high reliability standards. This ultimately supports better decision-making and operational efficiency.

Conclusion

Ensuring the reliability of datasets is not just important; it’s essential for effective decision-making and operational success. By grasping the key dimensions of dataset reliability - accuracy, completeness, and consistency - organizations can create a data environment that fosters trust and informed choices. Implementing structured evaluation criteria and metrics, such as accuracy rates and consistency checks, significantly enhances the ability to assess and maintain data integrity.

This article outlines crucial steps for evaluating the reliability of lead datasets. Validation through rigorous testing methods and continuous monitoring strategies is key. Techniques like reproducibility testing, anomaly detection, and feedback loops empower organizations to proactively identify and rectify issues. Moreover, leveraging specialized tools and resources streamlines the evaluation process, ensuring datasets remain precise and dependable.

In a landscape where data-driven decisions are paramount, prioritizing dataset reliability is not merely beneficial; it’s necessary. Embracing these practices fortifies data integrity and enhances overall operational efficiency. Organizations must take action - implement these strategies, utilize the recommended tools, and foster a culture of continuous improvement to elevate their data quality standards and achieve sustainable success.

Frequently Asked Questions

What is dataset reliability?

Dataset reliability refers to the trustworthiness of information within a dataset, assessed based on its accuracy, completeness, and consistency over time.

What are the key dimensions of dataset reliability?

The key dimensions of dataset reliability are accuracy, completeness, and consistency. Accuracy ensures the data reflects real-world entities accurately, completeness ensures all necessary information is present, and consistency guarantees stability of information across datasets.

Why is accuracy important in dataset reliability?

Accuracy is crucial because it ensures that the data accurately represents real-world entities or events. Inaccurate data can lead to misguided conclusions and potentially cost businesses valuable opportunities.

What does completeness mean in the context of dataset reliability?

Completeness refers to having all necessary information points in a dataset. Incomplete data can skew analyses and create gaps in insights, which is particularly detrimental in areas like lead generation.

How does consistency affect dataset reliability?

Consistency ensures that information remains stable across various datasets and over time. Inconsistent information can lead to conflicting reports, undermining confidence in the data.

What metrics can be used to assess dataset reliability?

Key metrics for assessing dataset reliability include accuracy rate, completeness rate, consistency checks, error rate, and timeliness.

How is the accuracy rate calculated?

The accuracy rate measures the percentage of correct entries in a dataset compared to a verified source.

What is the significance of the completeness rate?

The completeness rate calculates the proportion of complete records against the total number of records, highlighting the extent of any missing information that could lead to misguided decisions.

What are consistency checks?

Consistency checks involve comparing datasets collected under similar conditions to ensure they yield the same results, which is a hallmark of reliable data.

Why is timeliness important in dataset reliability?

Timeliness evaluates whether the data is current and relevant for decision-making. Outdated data can mislead strategies and affect outcomes.