Introduction

Proxies are vital conduits connecting users to the vast expanse of the internet, especially in data collection and competitive intelligence. As sales leaders increasingly turn to Python Requests to navigate these digital waters, mastering proxy usage becomes essential for effective web scraping and information gathering. However, managing proxies introduces complexities, including the challenge of ensuring seamless integration and overcoming common pitfalls.

How can sales teams harness these powerful tools to enhance their lead generation strategies while maintaining compliance and avoiding detection? By understanding the intricacies of proxy management, teams can unlock new opportunities for data-driven decision-making and competitive advantage.

In this landscape, the ability to effectively utilize proxies not only streamlines the data collection process but also safeguards against potential risks. Embracing this knowledge empowers sales professionals to navigate the digital realm with confidence, ensuring they remain ahead in the competitive market.

Understand Proxies and Their Purpose in Python Requests

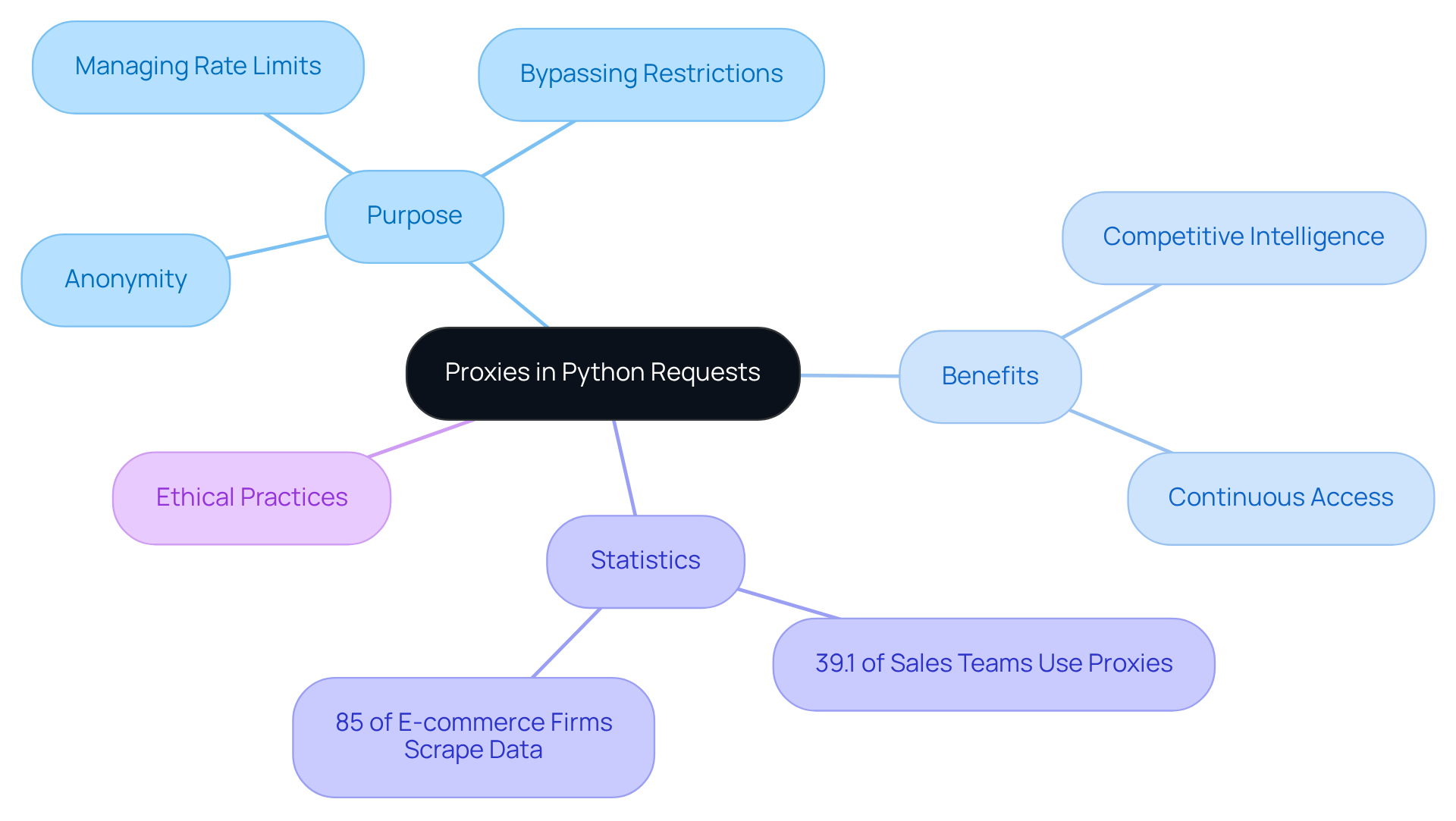

Proxies act as intermediaries between your computer and the internet, allowing you to send requests through different IP addresses. This is essential for web scraping, especially when trying to avoid detection or IP bans from target websites. With Python Requests, a proxy not only provides a degree of anonymity but also helps bypass geographical restrictions and stay within rate limits imposed by servers.

As of 2026, approximately 39.1% of sales teams utilize intermediary providers for information collection, underscoring their significance in competitive intelligence. Notably, 85% of e-commerce firms actively monitor competitor pricing and promotions through scraping, leveraging alternative servers to maintain a discreet presence while gathering crucial market insights. Industry leaders emphasize that intermediaries are indispensable for ensuring online anonymity. F5 Labs aptly states, "The distinction between beneficial and harmful bots is context, not code," highlighting the importance of ethical information-gathering practices.

By mastering the use of intermediaries, sales teams can secure reliable and continuous access to essential information, ultimately enhancing their strategic decision-making capabilities. Furthermore, Websets' enterprise-grade AI-driven web search solutions, which feature custom semantic search and AI responses, give sales teams advanced information discovery tools that comply with industry standards, including SOC2 certification and comprehensive Data Processing Agreements.

As the compliance landscape evolves in 2026, the demand for transparent, permission-based access to information grows increasingly critical. This reinforces the role of intermediaries in responsible information collection. Are you ready to leverage these tools for your competitive advantage?

Install and Configure Python Requests Library

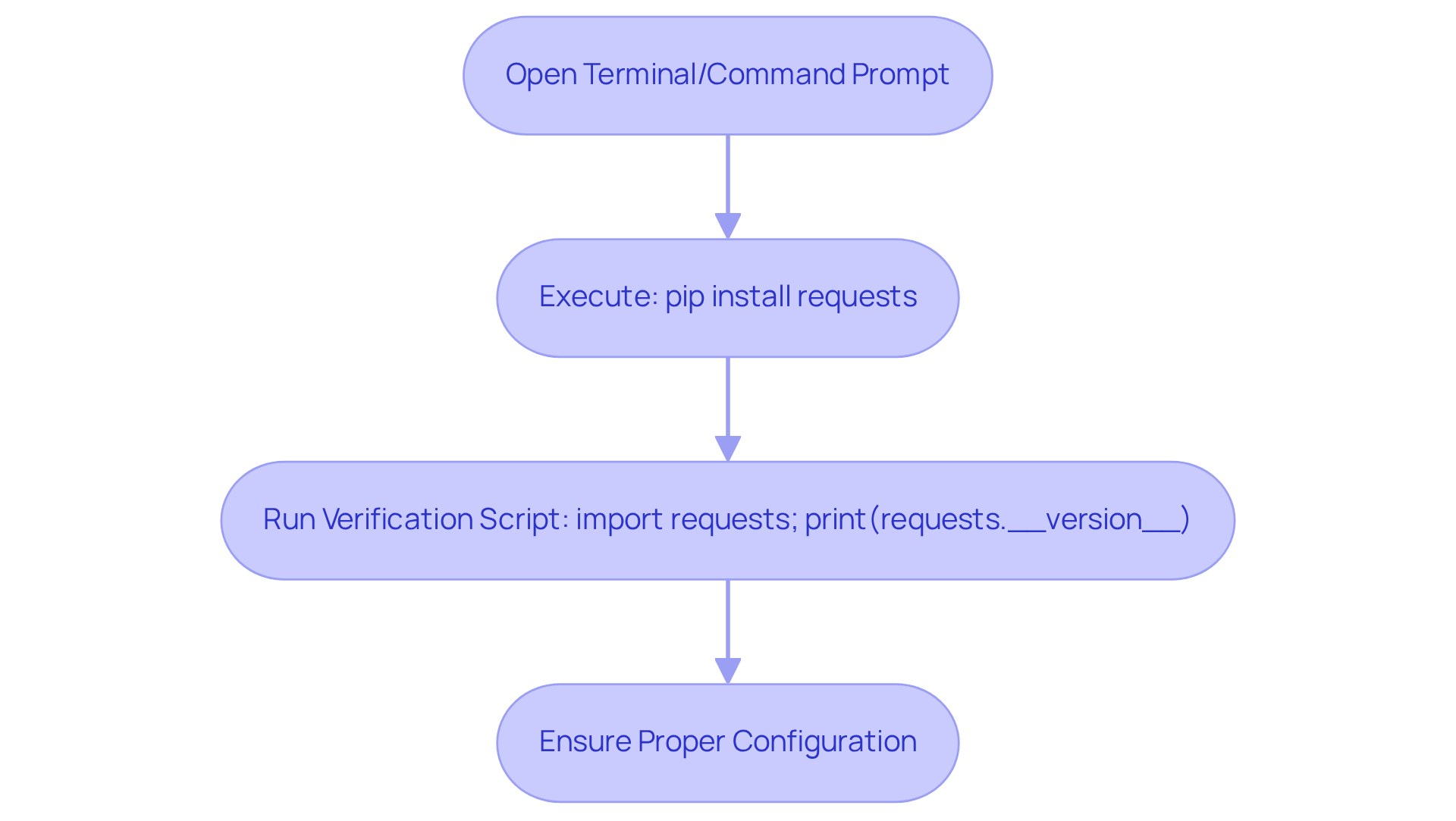

To effectively utilize the Python Requests library, installation is your first step. Open your terminal or command prompt and execute:

pip install requests

Once installed, it’s crucial to verify that the library is set up correctly. Run this simple script:

import requests

print(requests.__version__)

This script will display the version of the Requests library currently installed. Ensuring your Python setup is correctly arranged is vital, especially as you integrate tools for information gathering in your B2B lead generation efforts.

Did you know that 83% of respondents are not using the latest version of Python? This statistic underscores the importance of keeping your tools updated. The Requests library is a favorite among sales teams, with many professionals routing it through proxies to enhance their information collection processes. As of 2026, the library continues to receive updates that improve its functionality, including performance enhancements and user-friendliness.

Developers stress the significance of configuring libraries like Requests to optimize performance and ensure seamless integration into their workflows. To manage dependencies effectively, it’s recommended to use a virtual environment or container when installing the latest version of Python. This approach not only streamlines your setup but also enhances your overall productivity.

Implement Proxies in Python Requests: Methods and Examples

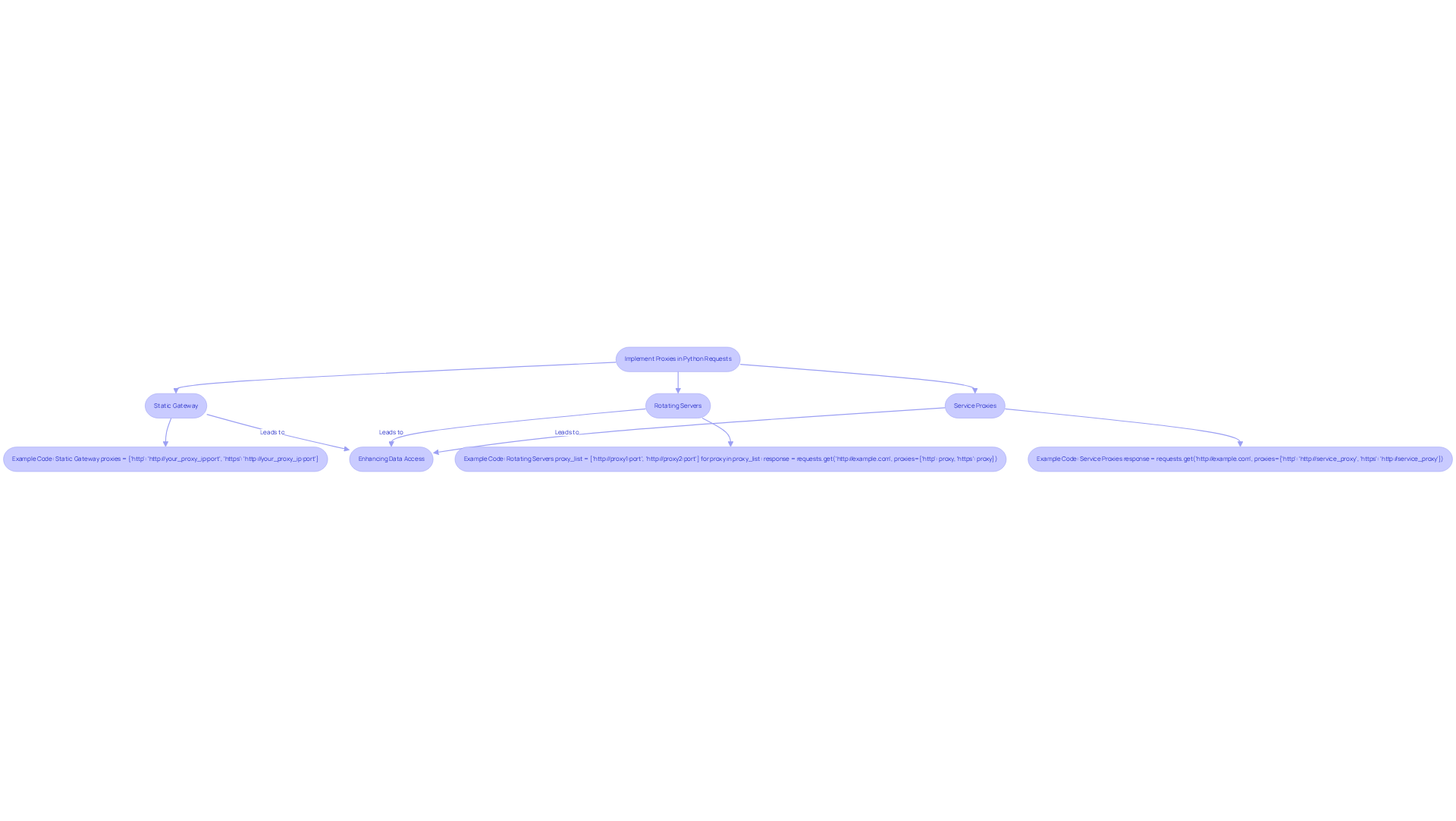

Using a proxy with Python Requests can significantly enhance your data access capabilities for lead generation, especially when combined with the Websets API. Here’s how you can use proxies effectively:

- Static Gateway: This straightforward approach routes all requests through a single intermediary, ensuring a consistent IP address. For example:

import requests

proxies = {'http': 'http://your_proxy_ip:port', 'https': 'http://your_proxy_ip:port'}
response = requests.get('http://example.com', proxies=proxies)

- Rotating Servers: To minimize the risk of bans, consider using rotating servers. This method cycles through a list of intermediaries, which enhances anonymity and reduces detection. Here’s how:

proxy_list = ['http://proxy1:port', 'http://proxy2:port']
# Send the request through each proxy in turn
for proxy in proxy_list:
    response = requests.get('http://example.com', proxies={'http': proxy, 'https': proxy})

- Many services provide rotating proxies behind a single endpoint that plugs into Python Requests like any other proxy. Follow the service's API documentation for the exact endpoint and credentials. For instance:

response = requests.get('http://example.com', proxies={'http': 'http://service_proxy', 'https': 'http://service_proxy'})
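The rotation idea above can be taken one step further by moving to the next proxy whenever a request fails. The following is a minimal sketch, not a definitive implementation: the proxy URLs are hypothetical placeholders, and `proxies_for` and `fetch_with_rotation` are illustrative helper names rather than part of Requests.

```python
import itertools

import requests

# Hypothetical placeholder endpoints; substitute real proxy URLs.
PROXY_URLS = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
]


def proxies_for(proxy_url):
    """Build the mapping Requests expects, using one proxy for both schemes."""
    return {"http": proxy_url, "https": proxy_url}


def fetch_with_rotation(url, proxy_urls, max_attempts=4, timeout=10):
    """Try the URL through successive proxies until one attempt succeeds."""
    pool = itertools.cycle(proxy_urls)
    last_error = None
    for _ in range(max_attempts):
        proxy_url = next(pool)
        try:
            response = requests.get(
                url, proxies=proxies_for(proxy_url), timeout=timeout
            )
            response.raise_for_status()
            return response
        except requests.exceptions.RequestException as exc:
            # Remember the failure and move on to the next proxy in the cycle.
            last_error = exc
    raise RuntimeError(f"all {max_attempts} attempts failed") from last_error
```

Calling `fetch_with_rotation("http://example.com", PROXY_URLS)` returns the first successful response, or raises `RuntimeError` once every attempt has failed. Cycling on failure rather than on every request is only one possible policy; a scraper that wants a fresh IP per request would call `next(pool)` before each call instead.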

Implementing these methods not only streamlines your data gathering process but also boosts your ability to access critical sales information. By leveraging the Websets API, which offers features like high-capacity rate limits and premium support, you can swiftly and accurately obtain relevant information, enhancing your lead generation efforts. In fact, statistics indicate that using rotating servers can improve data access efficiency by up to 99.98%, making them an essential tool for sales teams looking to refine their lead generation strategies. Without intermediaries, you risk facing issues such as server blocks or connection errors, underscoring the necessity of using intermediaries in web scraping.
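When every request should share the same static gateway, the proxy settings can live on a `requests.Session` instead of being passed to each call. This is a small sketch under stated assumptions: the proxy URL is a hypothetical placeholder and `make_proxied_session` is an illustrative name.

```python
import requests


def make_proxied_session(proxy_url):
    """Return a Session whose requests all go through proxy_url."""
    session = requests.Session()
    session.proxies.update({"http": proxy_url, "https": proxy_url})
    return session


# Hypothetical proxy endpoint; no request is sent until session.get() is called.
session = make_proxied_session("http://proxy.example.com:8080")
# Example call (not executed here):
# response = session.get("https://example.com", timeout=10)
```

Requests also reads proxy settings from the `HTTP_PROXY` and `HTTPS_PROXY` environment variables, so it is worth checking those if a session appears to use a different proxy than the one configured here.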

Troubleshoot Common Proxy Issues in Python Requests

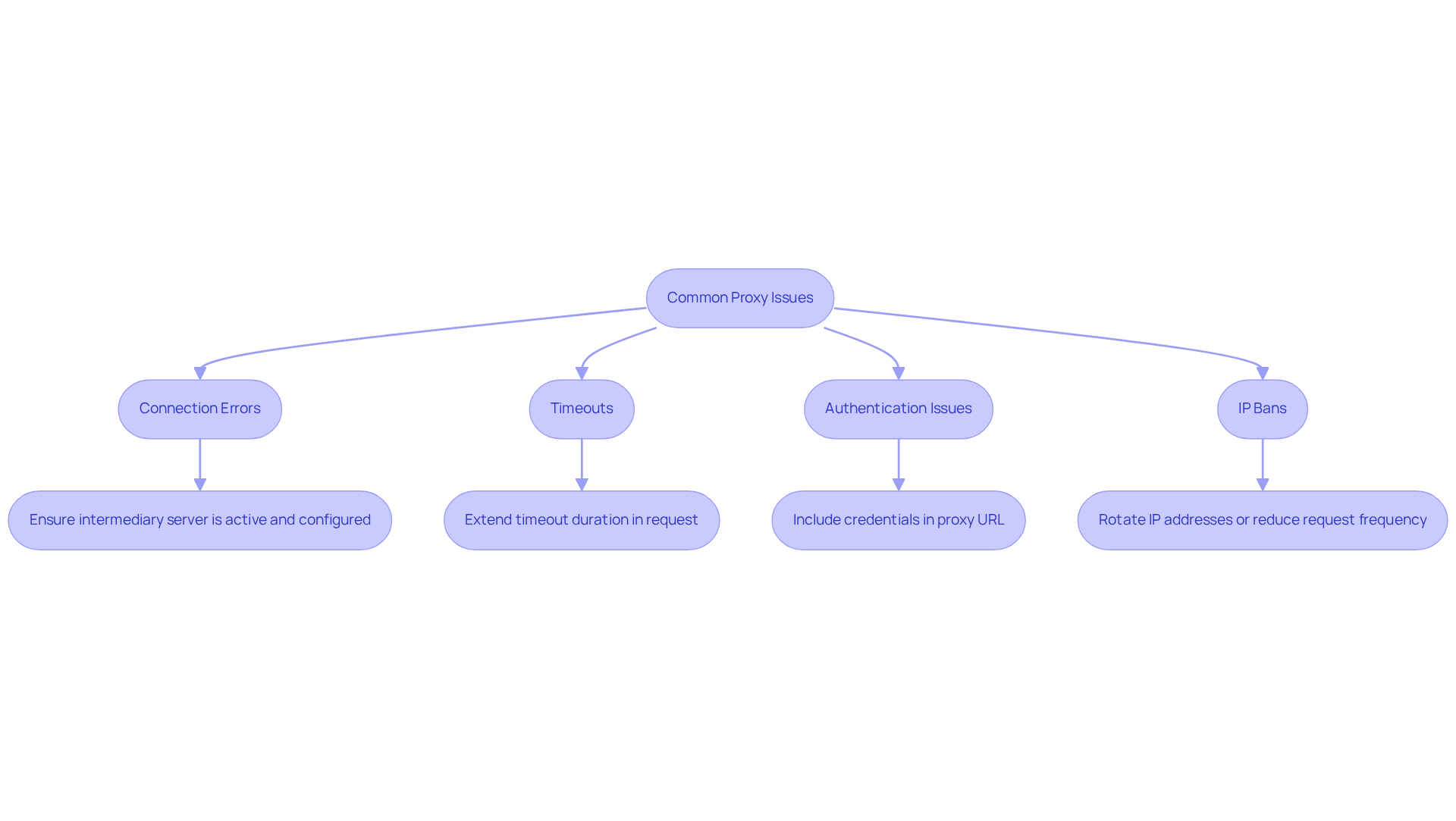

When using proxies, several common issues can arise that may hinder your experience:

- Connection Errors: Encountering a connection error? First, ensure your intermediary server is active and properly configured. A quick test in your browser can confirm its functionality. Look out for errors like requests.exceptions.ConnectionError, which signals that the connection couldn't be established.

- Timeouts: Timeouts pose a significant challenge, with data indicating that approximately 30% of intermediary communications face this issue. To combat this, set an explicit timeout in your request and extend it if the proxy is slow (Requests applies no timeout unless you pass one):

response = requests.get('http://example.com', proxies=proxies, timeout=10)

If you see requests.exceptions.Timeout, it means your request has exceeded the specified timeout duration.

- Authentication Issues: Many intermediaries require authentication. You can include your credentials directly in the proxy URL, and set it for both schemes so HTTPS requests are proxied too:

proxy_url = 'http://username:password@your_proxy_ip:port'
proxies = {'http': proxy_url, 'https': proxy_url}

- IP Bans: Getting blocked? Rotating your IP addresses or reducing the frequency of your requests can help you avoid detection. Relying on a single server can lead to bans or rate limits, so a strategy for rotating servers is essential for maintaining access to the information you need.
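The connection and timeout cases above can be told apart in code by catching the exception classes that Requests raises. The sketch below is illustrative only: `describe_failure` and `fetch` are hypothetical helper names, and the hint strings and timeout values are arbitrary choices.

```python
import requests


def describe_failure(exc):
    """Map a Requests exception to a short troubleshooting hint."""
    # Order matters: ProxyError subclasses ConnectionError, and
    # ConnectTimeout subclasses both ConnectionError and Timeout.
    if isinstance(exc, requests.exceptions.ProxyError):
        return "proxy unreachable or rejected the request"
    if isinstance(exc, requests.exceptions.Timeout):
        return "timed out"
    if isinstance(exc, requests.exceptions.ConnectionError):
        return "connection failed"
    return "other request error"


def fetch(url, proxies, timeout=(5, 15)):
    """Fetch url through proxies; return (response, None) or (None, hint)."""
    try:
        # A tuple timeout means (connect seconds, read seconds).
        response = requests.get(url, proxies=proxies, timeout=timeout)
        response.raise_for_status()
        return response, None
    except requests.exceptions.RequestException as exc:
        return None, describe_failure(exc)
```

Separating the connect and read timeouts makes it easier to distinguish a proxy that never answers from a target site that answers slowly.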

By recognizing these common issues and implementing the corresponding solutions, you can significantly improve your experience using proxies with Python Requests, leading to more reliable data scraping outcomes.
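One detail worth sketching for the authentication case: credentials that contain characters such as `@` or `:` can break a proxy URL unless they are percent-encoded first. The helper below is a minimal sketch using only the standard library; `proxy_url_with_auth`, the host name, and the credentials are hypothetical.

```python
from urllib.parse import quote


def proxy_url_with_auth(username, password, host, port, scheme="http"):
    """Build scheme://user:pass@host:port with credentials percent-encoded."""
    user = quote(username, safe="")
    secret = quote(password, safe="")
    return f"{scheme}://{user}:{secret}@{host}:{port}"


# Placeholder credentials and host for illustration only.
proxy_url = proxy_url_with_auth("user", "p@ss:word", "proxy.example.com", 8080)
proxies = {"http": proxy_url, "https": proxy_url}
```

The resulting `proxies` mapping can be passed to `requests.get` in the same way as the earlier examples.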

Conclusion

Mastering the use of proxies with Python Requests is not just beneficial; it’s essential for sales leaders who want to elevate their data gathering capabilities. By effectively employing proxies, teams can navigate the complexities of web scraping, ensuring anonymity while accessing crucial market insights. This capability not only shields against detection but also facilitates efficient information collection, which is vital in today’s competitive landscape.

The article delved into key insights regarding the functionality and implementation of proxies. From grasping the purpose of proxies and their advantages in Python Requests to practical methods for installation and configuration, this guide offers a comprehensive overview. Various strategies, such as static gateways and rotating servers, were highlighted as effective means to minimize risks and maximize data access efficiency. Additionally, common proxy issues and their solutions were addressed, empowering users to troubleshoot effectively and maintain seamless operations.

In an era where data accuracy and timely access to information are paramount, leveraging proxies has become a necessity, not an option. Sales teams must adopt these practices to refine their lead generation strategies and stay ahead of the competition. By embracing the tools and techniques outlined, organizations can enhance their data-driven decision-making processes, ultimately leading to greater success in their sales endeavors. Are you ready to take your data gathering to the next level?

Frequently Asked Questions

What is the purpose of proxies in Python Requests?

Proxies serve as intermediaries between your computer and the internet, allowing you to send requests through different IP addresses, which is essential for web scraping to avoid detection or IP bans.

How do proxies benefit web scraping?

Proxies provide anonymity, help bypass geographical restrictions, and manage rate limits imposed by servers, making them crucial for effective web scraping.

What percentage of sales teams use intermediary providers for information collection as of 2026?

Approximately 39.1% of sales teams utilize intermediary providers for information collection.

How do e-commerce firms use proxies in their operations?

About 85% of e-commerce firms actively monitor competitor pricing and promotions through scraping, using proxies to maintain a discreet presence while gathering market insights.

What do industry leaders say about the importance of intermediaries?

Industry leaders emphasize that intermediaries are essential for ensuring online anonymity and responsible information-gathering practices.

How can mastering the use of intermediaries benefit sales teams?

By mastering intermediaries, sales teams can secure reliable access to essential information, enhancing their strategic decision-making capabilities.

What advanced tools can sales teams use for information discovery?

Sales teams can use Websets' enterprise-grade AI-driven web search solutions, which include custom semantic search and AI responses and comply with industry standards such as SOC2 certification.

Why is transparent, permission-based access to information increasingly critical in 2026?

As the compliance landscape evolves, the demand for transparent, permission-based access to information grows, reinforcing the role of intermediaries in responsible information collection.