Overview

Mastering web crawling with Python is essential for sales leaders. It automates the collection of valuable market data, enhances lead generation, and enables the analysis of competitor strategies. This article outlines crucial tools and libraries, presents a step-by-step guide for building a web crawler, and emphasizes advanced techniques that improve data quality and ensure compliance with ethical standards. These elements illustrate the significant impact of web crawling on sales efficiency, prompting sales leaders to integrate these practices into their strategies.

Introduction

Mastering the art of web crawling is a transformative strategy for sales leaders navigating the competitive landscape of data-driven decision-making. By leveraging Python, professionals can automate the collection of vital information, from pinpointing potential leads to scrutinizing competitor strategies. However, a pressing challenge persists: how can one effectively establish a web crawler that not only aggregates data but also guarantees its quality and relevance? This tutorial explores the essential tools, techniques, and best practices that will empower sales teams to enhance their lead generation strategies and drive substantial success.

Understand Web Crawling: Concepts and Importance

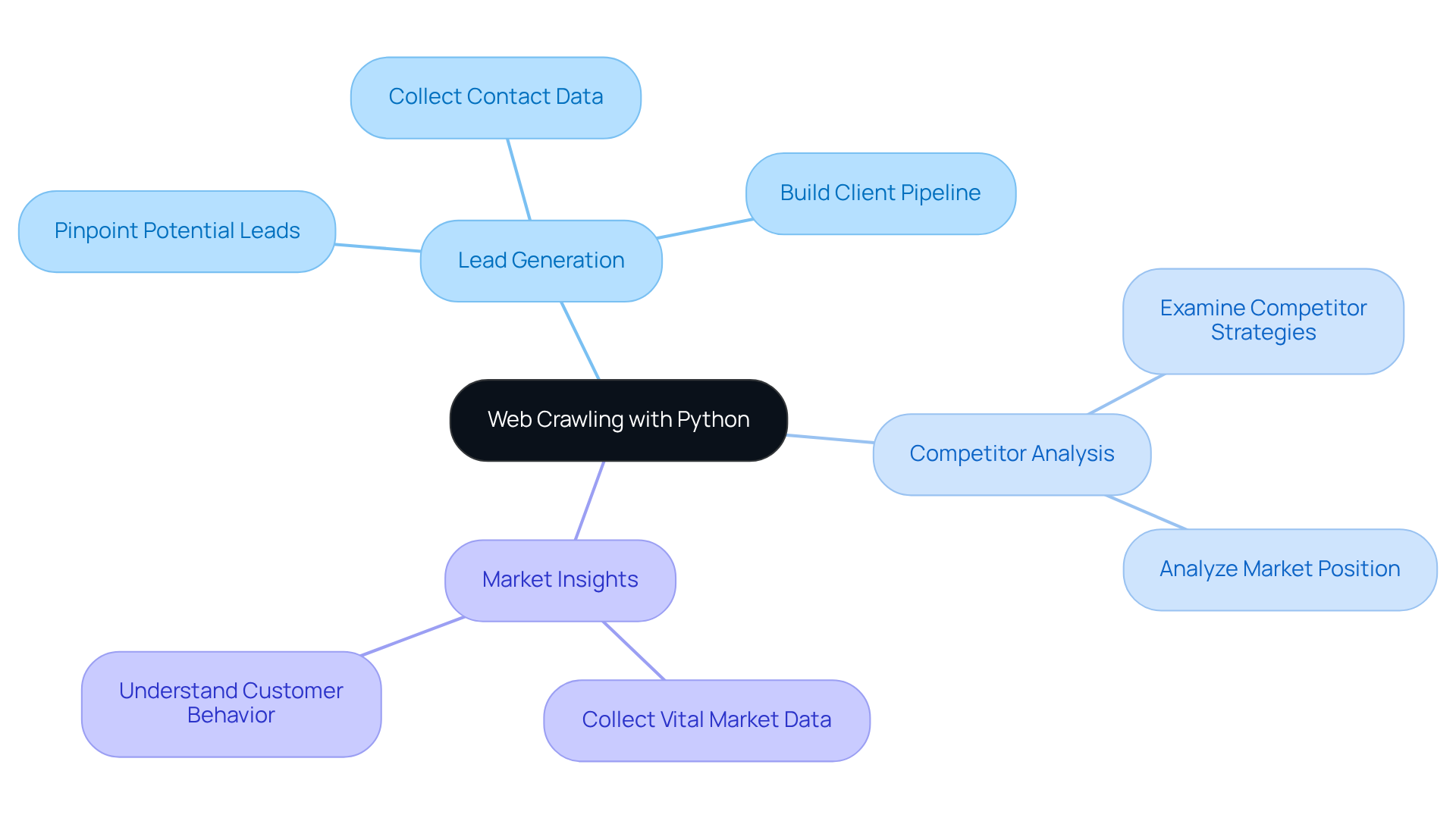

Web crawling with Python is the automated process of systematically browsing the web to gather information from web pages. For sales leaders, it is worth mastering because it enables the extraction of valuable information that can strengthen lead generation strategies.

By utilizing web bots, companies can effectively:

- Pinpoint potential leads

- Examine competitor strategies

- Collect vital market insights

This approach not only streamlines data collection but also improves the accuracy of the information gathered. Consequently, sales teams can focus on high-quality leads that are more likely to convert into customers. In an increasingly data-driven landscape, effective web crawling with Python can markedly improve a sales team's efficiency and its success in reaching targets.

Set Up Your Environment: Required Tools and Libraries

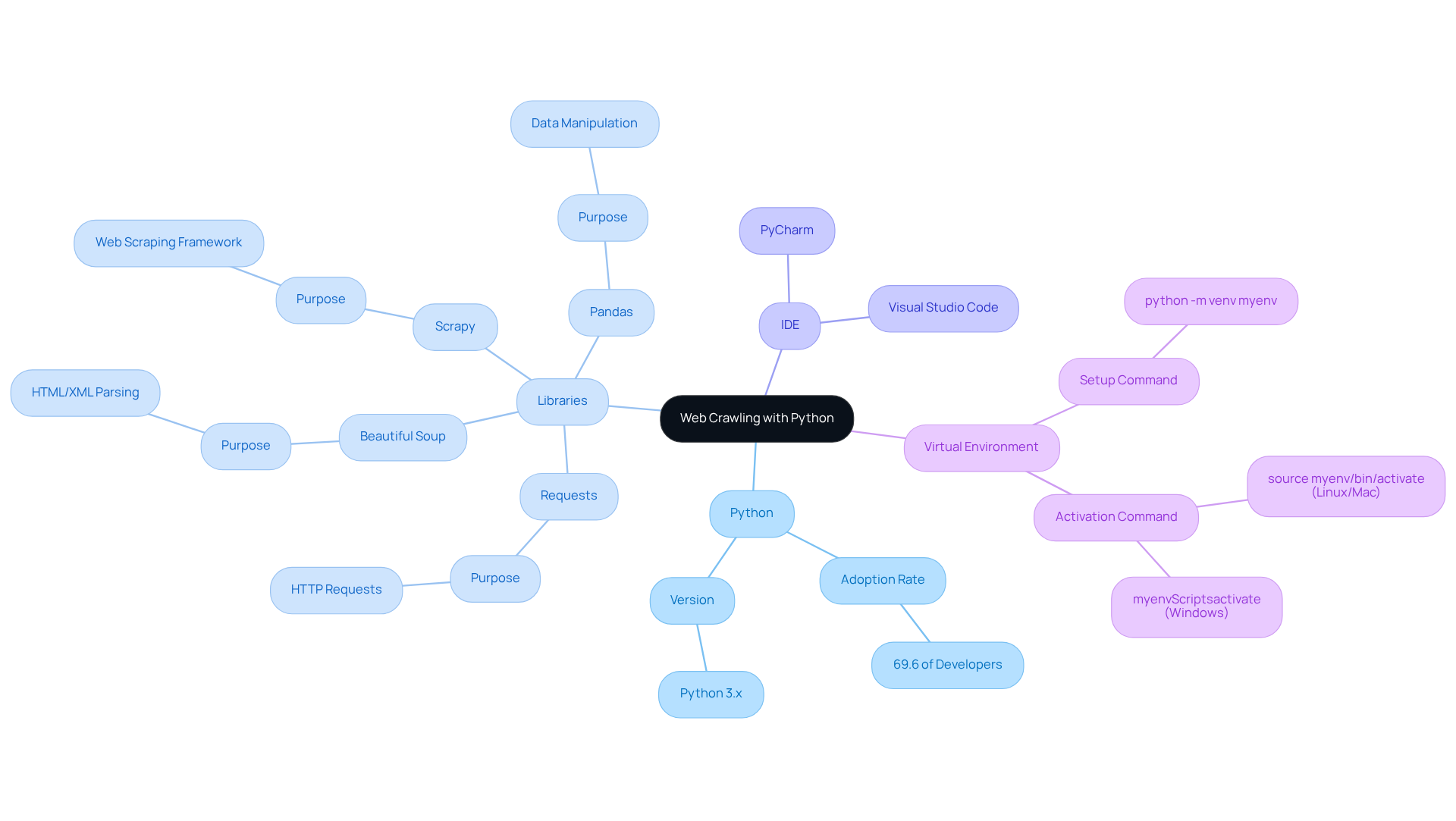

To effectively engage in web crawling with Python, establishing a solid development environment is paramount. Here are the essential tools and libraries you should consider:

- Python: Ensure you have Python 3.x installed on your machine. It offers extensive resources and strong community support, making it the preferred choice for web crawling. Notably, Python dominates with a 69.6% adoption rate among developers for web scraping, thanks to its rich ecosystem and readability.

- Libraries: Install the following libraries using pip:

  - Requests: This library is essential for making HTTP requests to fetch web pages, simplifying the process of data retrieval.

  - Beautiful Soup: Ideal for parsing HTML and XML documents, Beautiful Soup allows for easy navigation and manipulation of the parse tree.

  - Scrapy: A powerful framework designed specifically for building web robots and scrapers, Scrapy excels in handling complex tasks and large-scale projects. Case studies highlight Scrapy's effectiveness for projects requiring custom features and flexible pipelines.

  - Pandas: This tool is invaluable for data manipulation and analysis, enabling efficient organization and processing of the data you collect.

- IDE: Utilize an Integrated Development Environment (IDE) such as PyCharm or Visual Studio Code to streamline your coding and debugging processes.

- Virtual Environment: Setting up a virtual environment is crucial for managing dependencies and avoiding conflicts. Create one with `python -m venv myenv` and activate it with `source myenv/bin/activate` (Linux/Mac) or `myenv\Scripts\activate` (Windows).

With the appropriate tools and libraries in place, you will be well prepared to build an efficient web crawler tailored to your specific requirements in B2B sales. Remember, "Crawling is web scraping with exploration capability," which underscores the importance of understanding the tools you are using.
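As a quick sanity check after installation, a short script can confirm that the libraries listed above are importable from the active virtual environment. This is only a sketch: the helper name `missing_modules` is hypothetical, and it assumes the packages were installed with `pip install requests beautifulsoup4 scrapy pandas`.

```python
import importlib.util

# Import names for the libraries listed above. Beautiful Soup is installed
# as "beautifulsoup4" but imported as "bs4".
REQUIRED_MODULES = ["requests", "bs4", "scrapy", "pandas"]


def missing_modules(module_names):
    """Return the names from module_names that cannot be found."""
    return [
        name for name in module_names
        if importlib.util.find_spec(name) is None
    ]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules are importable.")
```

If the script reports missing modules, the most likely cause is that the virtual environment was not activated before running pip.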

Build Your First Web Crawler: Step-by-Step Guide

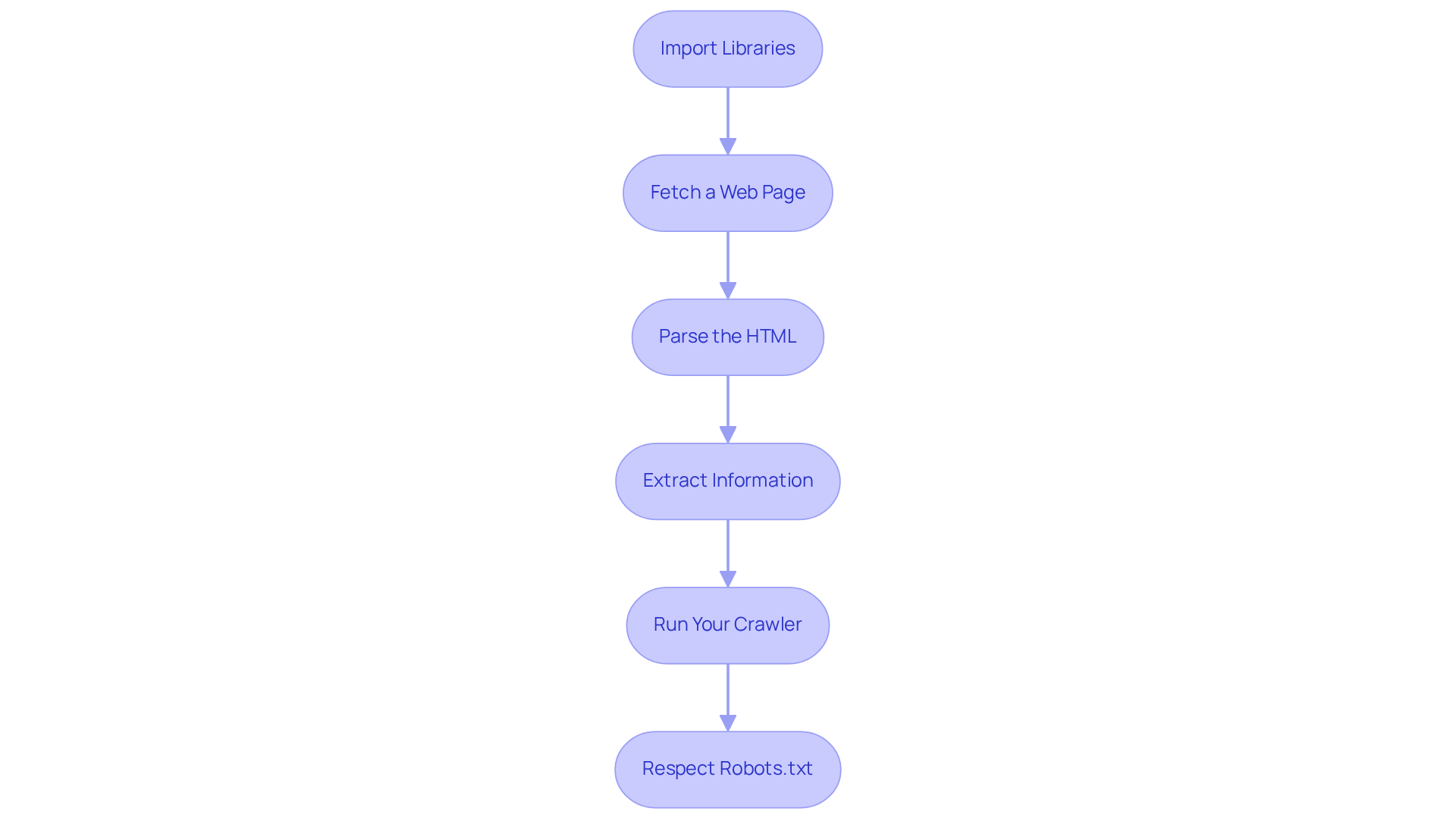

With your environment set up, it's time to construct your first web crawler. Follow these essential steps:

- Import Libraries: Begin by importing the necessary libraries in your Python script:

      import requests
      from bs4 import BeautifulSoup

- Fetch a Web Page: Leverage the Requests library to fetch a web page. For instance:

      url = 'https://example.com'
      response = requests.get(url)

- Parse the HTML: Utilize Beautiful Soup to parse the HTML content:

      soup = BeautifulSoup(response.text, 'html.parser')

- Extract Information: Determine the information you wish to extract. If you're aiming to gather all the links, for example:

      links = soup.find_all('a')
      for link in links:
          print(link.get('href'))

- Run Your Crawler: Execute your script to observe the extracted information. Ensure you handle exceptions and errors with care.

- Respect Robots.txt: Prior to crawling a website, consult its `robots.txt` file to confirm that you are permitted to scrape the content.
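The robots.txt check in the last step can be automated with Python's standard-library `urllib.robotparser` module. The sketch below parses a hypothetical robots.txt string so that it runs offline. The helper name `is_allowed`, the sample rules, and the `my-crawler` user agent are all assumptions made for illustration.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt, user_agent, url):
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt content, used here so the example runs offline.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

if __name__ == "__main__":
    for path in ("/products", "/private/report"):
        url = "https://example.com" + path
        print(url, is_allowed(SAMPLE_ROBOTS_TXT, "my-crawler", url))
```

Against a live site, `RobotFileParser` can instead be pointed at the site's robots.txt URL with `set_url()` and loaded with `read()` before calling `can_fetch()`.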

By adhering to these steps, you will establish a foundational web scraper for web crawling with Python, capable of retrieving and extracting information from web pages. This sets the stage for more advanced functionalities.
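Put together, the steps above might look like the following sketch, which also adds the exception handling the guide recommends. The function names `fetch_html` and `extract_links` are hypothetical, and the 10-second timeout is an arbitrary assumption rather than a recommended value.

```python
from urllib.parse import urljoin

import requests
from bs4 import BeautifulSoup


def fetch_html(url, timeout=10):
    """Return the page body as text, or None if the request fails."""
    try:
        response = requests.get(url, timeout=timeout)
        response.raise_for_status()  # treat 4xx/5xx responses as errors
    except requests.RequestException as error:
        print(f"Could not fetch {url}: {error}")
        return None
    return response.text


def extract_links(html, base_url):
    """Return absolute URLs for every <a> tag in html that has an href."""
    soup = BeautifulSoup(html, "html.parser")
    return [
        urljoin(base_url, anchor["href"])
        for anchor in soup.find_all("a", href=True)
    ]
```

Calling `fetch_html("https://example.com")` and passing the result to `extract_links` reproduces the walkthrough. Resolving each link against the base URL with `urljoin` is a design choice here, so that relative links such as `/about` come back as full URLs the crawler could visit next.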

Extract and Parse Data: Advanced Techniques and Best Practices

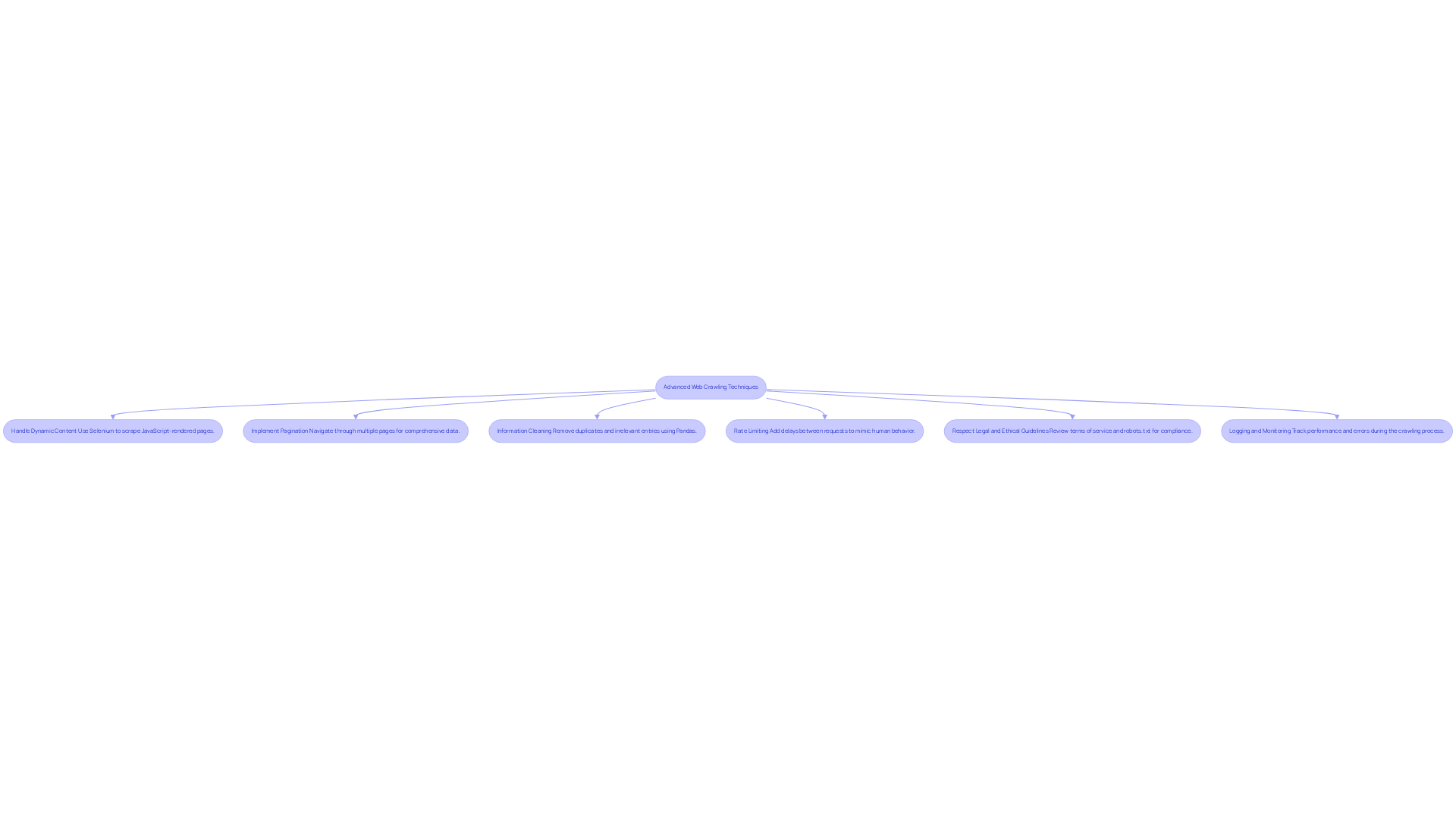

To elevate the capabilities of your web crawler, consider implementing these advanced techniques and best practices:

- Handle Dynamic Content: Many websites utilize JavaScript for dynamic content loading. Engage with these pages efficiently using libraries such as Selenium to obtain the required information. Be mindful of common challenges, including timeouts and session management, which can complicate this process.

- Implement Pagination: When your information spans multiple pages, ensure your crawler can navigate through all of them by implementing pagination. This allows for comprehensive data collection.

- Data Cleaning: After extraction, clean your data to remove duplicates and irrelevant entries. Using Pandas can streamline this process and significantly improve data quality. Poor data quality has been estimated to cost the U.S. $3.1 trillion each year, underscoring the importance of efficient cleansing. As Josh Wills observes, 'High-quality information is essential for making informed decisions.'

- Rate Limiting: To prevent overwhelming servers, incorporate rate limiting in your web scraper. This involves adding delays between requests, simulating human browsing behavior, and reducing the risk of being blocked.

- Respect Legal and Ethical Guidelines: Always review the website's terms of service and robots.txt file to ensure adherence to their usage policies. Ethical scraping not only safeguards your business but also fosters positive relationships with website owners.

- Logging and Monitoring: Implement robust logging to track your web scraping tool's performance and monitor for errors or issues that may arise during the crawling process. This practice is essential for preserving the integrity of your information collection efforts, allowing you to address any problems promptly.
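Pagination, rate limiting, and logging from the list above can be combined in one loop. The sketch below uses only the standard library and takes the page-fetching logic as a parameter: `fetch_page` is a hypothetical caller-supplied function, and the one-second delay and 50-page cap are assumed defaults, not recommendations.

```python
import logging
import time

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("crawler")


def crawl_pages(fetch_page, first_page=1, max_pages=50, delay_seconds=1.0):
    """Collect items page by page until a page comes back empty.

    fetch_page is a caller-supplied function (hypothetical here) that takes
    a page number and returns a list of items, or an empty list when there
    are no more pages.
    """
    items = []
    for page in range(first_page, first_page + max_pages):
        try:
            page_items = fetch_page(page)
        except Exception:
            # Log the failure with a traceback and stop rather than guess.
            logger.exception("Failed to fetch page %d", page)
            break
        if not page_items:
            logger.info("Page %d is empty; stopping", page)
            break
        items.extend(page_items)
        logger.info("Page %d: %d items", page, len(page_items))
        time.sleep(delay_seconds)  # rate limiting: pause between requests
    return items


if __name__ == "__main__":
    # Stand-in data so the example runs without network access.
    fake_pages = {1: ["lead-1", "lead-2"], 2: ["lead-3"]}
    print(crawl_pages(lambda n: fake_pages.get(n, []), delay_seconds=0.1))
```

In a real crawler, `fetch_page` would wrap the request and parsing code for one results page. Keeping it separate makes the loop easy to test with stand-in data, as shown at the bottom of the block.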

By integrating these advanced techniques, you can significantly enhance the efficiency and effectiveness of web crawling with Python, ensuring the acquisition of high-quality data that supports your sales strategies.
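For the cleaning step mentioned above, a minimal Pandas sketch might look like this. The sample records, the column names `company` and `url`, and the helper name `clean_leads` are all hypothetical. Real scraped data will likely need additional rules.

```python
import pandas as pd

# Hypothetical scraped records; the column names are assumptions.
raw_leads = pd.DataFrame(
    {
        "company": ["Acme Corp", "acme corp ", "Globex", None],
        "url": [
            "https://acme.example",
            "https://acme.example",
            "https://globex.example",
            "https://unknown.example",
        ],
    }
)


def clean_leads(df):
    """Normalize company names, drop rows without one, and de-duplicate."""
    cleaned = df.dropna(subset=["company"]).copy()
    # Trim whitespace and unify capitalization so near-duplicates match.
    cleaned["company"] = cleaned["company"].str.strip().str.title()
    cleaned = cleaned.drop_duplicates(subset=["company", "url"])
    return cleaned.reset_index(drop=True)


if __name__ == "__main__":
    print(clean_leads(raw_leads))
```

Normalizing names before calling `drop_duplicates` matters here: without it, "Acme Corp" and "acme corp " would be treated as two different companies.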

Conclusion

Mastering web crawling with Python equips sales leaders with a formidable tool for enhancing lead generation and market analysis. By automating data collection, businesses can efficiently gather insights that inform strategic decisions, ultimately resulting in higher conversion rates and improved sales performance.

This article outlines essential concepts of web crawling, the necessary tools and libraries, and a step-by-step guide to building a web crawler. Key arguments emphasize the importance of utilizing Python due to its extensive community support and the effectiveness of libraries like Requests, Beautiful Soup, and Scrapy. Furthermore, advanced techniques such as handling dynamic content, implementing pagination, and adhering to legal guidelines are crucial for optimizing the data extraction process.

In today's data-driven marketplace, the ability to harness web crawling effectively can provide a significant competitive advantage. Sales leaders are encouraged to embrace these techniques and best practices, ensuring their teams can leverage high-quality data to drive informed decisions and achieve business objectives. The journey into web crawling with Python not only enhances operational efficiency but also empowers sales strategies to thrive in an increasingly digital landscape.

Frequently Asked Questions

What is web crawling with Python?

Web crawling with Python is the automated process of systematically browsing the internet to gather information from various web pages.

Why is web crawling important for sales leaders?

Mastering web crawling with Python is essential for sales leaders as it enables the extraction of valuable information that can enhance lead generation strategies.

How can companies benefit from using web bots in web crawling?

Companies can benefit from using web bots by pinpointing potential leads, examining competitor strategies, and collecting vital market insights.

What are the advantages of web crawling for sales teams?

Web crawling streamlines data collection, improves the accuracy of the information gathered, and allows sales teams to focus on high-quality leads that are more likely to convert into customers.

How does web crawling impact sales team efficiency?

Effective web crawling with Python can dramatically enhance a sales team's efficiency and success rates in achieving their targets in an increasingly data-driven landscape.