Overview

Mastering web scraping with Python requires a solid grasp of web data extraction fundamentals, the establishment of an appropriate environment, and the implementation of systematic scraping techniques—all while adhering to ethical guidelines. This article delineates essential concepts such as:

- HTML structure

- HTTP requests

It provides comprehensive steps for setting up a Python environment and underscores the critical importance of respecting legal and ethical standards. Such adherence ensures responsible data collection practices that not only enhance your skills but also safeguard your integrity in the field.

Introduction

Web scraping has emerged as a vital tool for businesses seeking to harness the power of data. Projections indicate that by 2025, 60% of companies will rely on this technique for strategic insights. This article delves into the essential techniques and setup required to master web scraping using Python, offering a comprehensive guide to extracting valuable information from the web. However, as the demand for data grows, so do the challenges associated with ethical practices and compliance. How can one effectively navigate these complexities while maximizing the benefits of web scraping?

Understand Web Scraping Fundamentals

Web scraping, also known as web harvesting, is an automated process for extracting data from websites, a practice that businesses increasingly adopt. In 2025, approximately 60% of companies will employ web data extraction to gather insights and enhance decision-making. The process involves sending requests to a web server, obtaining the webpage content, and analyzing it to extract the desired information. Understanding the layout of web pages, particularly HTML and CSS, is crucial for efficient scraping. Key concepts include:

- HTML Structure: Familiarize yourself with HTML tags and attributes, as they constitute the backbone of a webpage's structure. A strong understanding of HTML is essential for locating and extracting the information you need. Industry leaders assert that 'a profound comprehension of HTML and CSS is essential for successful web scraping', because precise extraction depends on it.

- HTTP Requests: Gain insights into GET and POST requests, which are vital for interacting with web servers and obtaining information.

- Data Formats: Understand the formats in which information can be delivered or stored, such as JSON, XML, or plain text, to ensure compatibility with your applications.
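To make the idea of tags and attributes concrete, here is a minimal sketch that uses only Python's standard-library `html.parser` module. The sample markup, the `LinkCollector` class, and the `collect_links` helper are illustrative assumptions, not part of any particular site or library; the article's own examples use BeautifulSoup, which offers a friendlier interface for the same job.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the href attribute of every <a> tag encountered."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        # attrs arrives as a list of (name, value) pairs, e.g. [("href", "/posts/1")]
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value is not None:
                    self.links.append(value)


# Hypothetical page fragment used only for illustration
SAMPLE_HTML = """
<html>
  <body>
    <h2 class="title">First post</h2>
    <a href="/posts/1">Read more</a>
    <h2 class="title">Second post</h2>
    <a href="/posts/2">Read more</a>
  </body>
</html>
"""


def collect_links(html):
    """Parse an HTML string and return the link targets in document order."""
    parser = LinkCollector()
    parser.feed(html)
    return parser.links


if __name__ == "__main__":
    print(collect_links(SAMPLE_HTML))
```

The tag name (`a`) identifies the kind of element, while the attribute (`href`) carries the value worth extracting; most scraping logic comes down to choosing the right combination of the two.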

Companies utilizing web extraction include e-commerce platforms that track competitor pricing, financial institutions analyzing market trends, and marketing agencies gathering information for sentiment analysis. However, web extraction presents challenges, such as managing AJAX calls, timed sessions, and navigating anti-bot measures. Ethical considerations are paramount; respecting terms of service and copyright laws is essential for sustainable data collection.

By mastering these fundamentals, you will be well-prepared to explore the practical aspects of web scraping with Python, allowing you to leverage this powerful tool for data-driven insights.
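The difference between the GET and POST requests mentioned among the key concepts can be sketched without touching the network. The example below builds, but does not send, one request of each kind using the standard library's `urllib`; the URLs, parameter names, and helper functions are assumptions chosen for illustration.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_get_request(base_url, params):
    """GET: parameters travel in the URL's query string."""
    return Request(f"{base_url}?{urlencode(params)}", method="GET")


def build_post_request(url, form_fields):
    """POST: parameters travel in the request body as encoded bytes."""
    body = urlencode(form_fields).encode("utf-8")
    return Request(url, data=body, method="POST")


if __name__ == "__main__":
    # Hypothetical search endpoint and form fields
    get_req = build_get_request("https://example.com/search", {"q": "laptops", "page": 2})
    post_req = build_post_request("https://example.com/search", {"q": "laptops", "page": 2})

    print(get_req.get_method(), get_req.full_url)
    print(post_req.get_method(), post_req.full_url, post_req.data)
```

Nothing is transmitted until such a request object is passed to `urllib.request.urlopen`, so the sketch is safe to run while experimenting.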

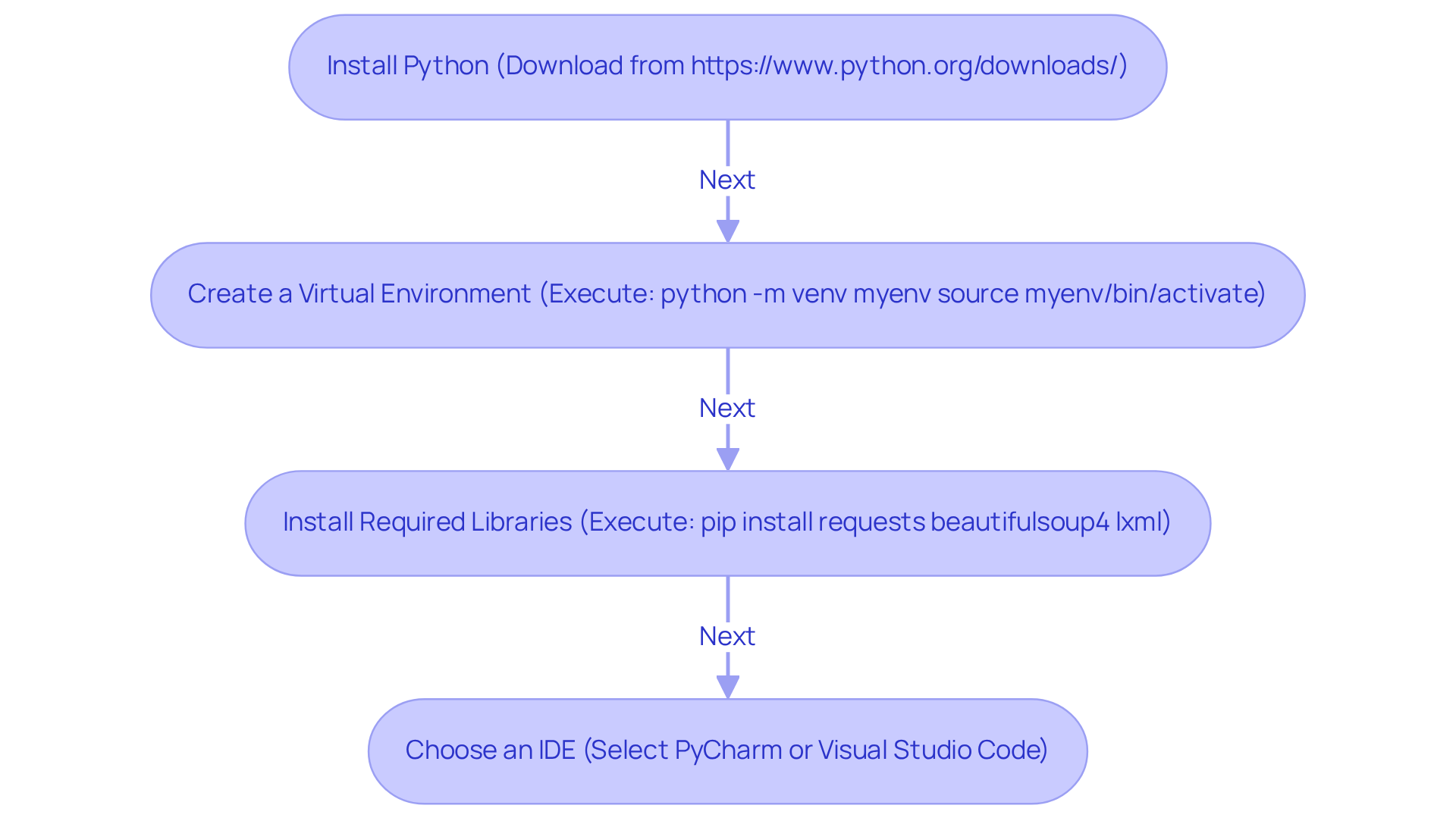

Set Up Your Python Environment for Web Scraping

To establish a Python environment tailored for web scraping, follow these essential steps:

- Install Python: Begin by downloading and installing the latest version of Python from the official website (https://www.python.org/downloads/).

- Create a Virtual Environment: This step is crucial for managing project dependencies. Execute the following commands:

      python -m venv myenv
      source myenv/bin/activate  # On Windows use `myenv\Scripts\activate`

- Install Required Libraries: Utilize pip to install essential libraries that facilitate web scraping:

      pip install requests beautifulsoup4 lxml

- Choose an IDE: Opt for an Integrated Development Environment (IDE) such as PyCharm or Visual Studio Code to write your scripts efficiently.
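Once the steps above are complete, a quick check can confirm that the libraries are visible from inside the activated environment. This is a small sketch rather than an official verification tool, and `check_packages` is a hypothetical helper name. Note that the import names differ from the pip names in one case: `beautifulsoup4` is imported as `bs4`.

```python
import importlib.util


def check_packages(module_names):
    """Return {module_name: bool} telling whether each top-level module can be found."""
    return {
        name: importlib.util.find_spec(name) is not None
        for name in module_names
    }


if __name__ == "__main__":
    # Import names for the packages installed with pip in the steps above
    for name, found in check_packages(["requests", "bs4", "lxml"]).items():
        print(f"{name}: {'ok' if found else 'missing'}")
```

If any entry reports `missing`, the usual cause is that the virtual environment was not activated before running `pip install`.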

By following these steps, you will create a robust environment optimized for effective web extraction tasks. Notably, web scraping with Python remains the preferred option for web data extraction, with over 80% of leading online retailers utilizing it to track competitor information daily. Developers emphasize that using virtual environments is vital for maintaining clean and manageable project dependencies, ensuring smoother development processes. As one developer insightfully noted, "A good data collection strategy isn’t just about gathering information—it’s about executing it in a manner that’s sustainable, adaptable, and resilient against the unavoidable challenges the internet presents." Additionally, consider employing proxies to avoid detection and blocking during your data collection activities.

Implement Web Scraping Techniques with Python

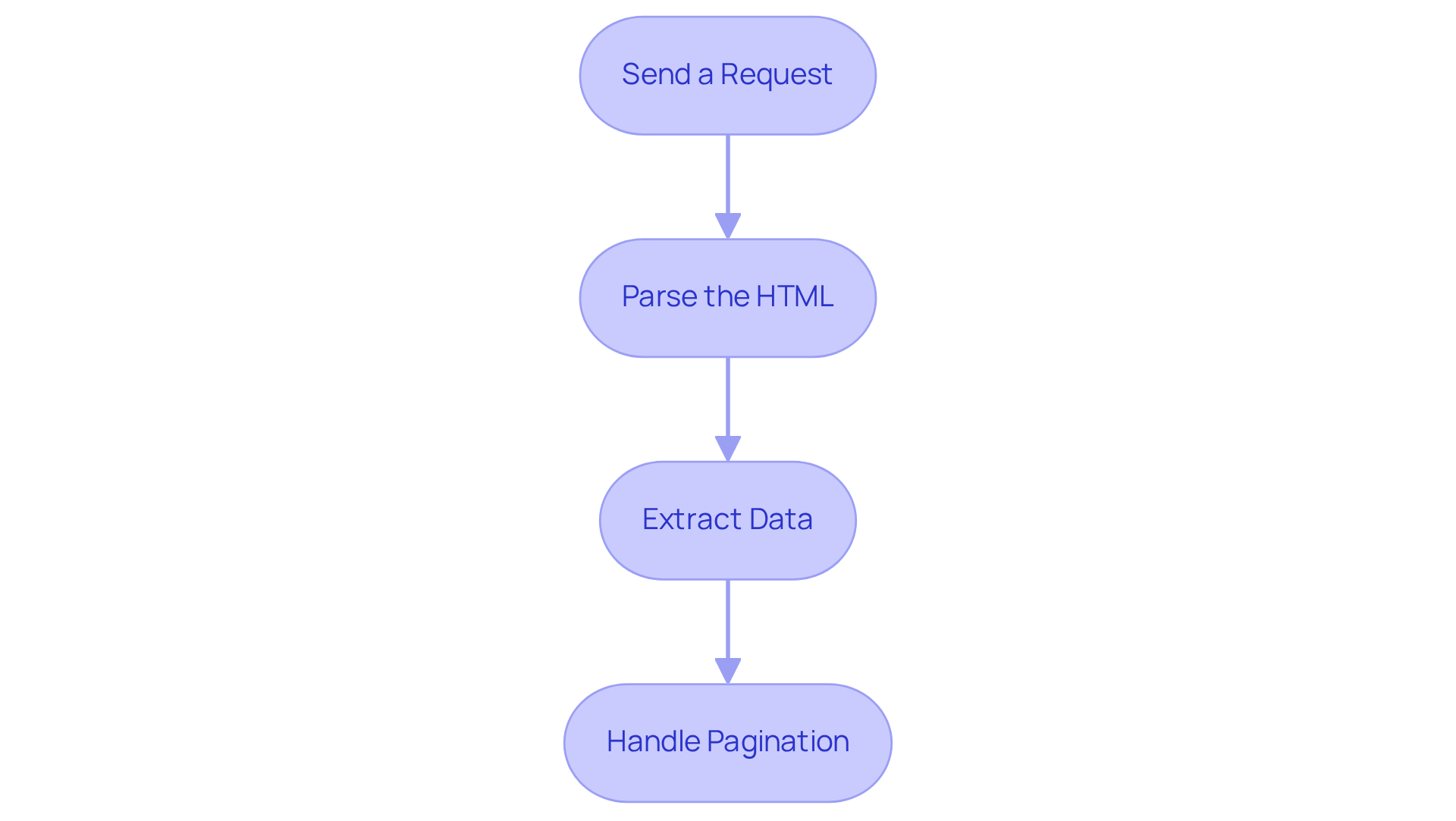

The process of web scraping with Python involves systematic techniques that can yield valuable data insights.

- Send a Request: Begin by utilizing the requests library to fetch the webpage content. Here’s how:

      import requests

      url = 'https://example.com'
      # A timeout keeps the script from hanging if the server never responds
      response = requests.get(url, timeout=10)
      # Raise an exception on 4xx/5xx responses instead of parsing an error page
      response.raise_for_status()

  This step is crucial, as it establishes the foundation for your scraping efforts.

- Parse the HTML: Next, employ BeautifulSoup to parse the HTML content effectively:

      from bs4 import BeautifulSoup

      soup = BeautifulSoup(response.text, 'lxml')

  Parsing the HTML allows you to navigate the document structure with ease.

- Extract Data: Identify the specific HTML elements containing the data you require and extract it:

      titles = soup.find_all('h2')  # Example for extracting all h2 tags
      for title in titles:
          print(title.text)

  This step is where you begin to gather the insights you need.

- Handle Pagination: If the information spans multiple pages, implement logic to navigate through them and extract content accordingly. This ensures that you capture all relevant data.
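The pagination step can be sketched as a simple loop. The version below assumes a site whose pages are addressed by a `?page=N` query parameter and that returns no items once the pages run out; real sites vary, and many expose a "next" link instead. The function names, the URL template, and the in-memory `FAKE_SITE` are hypothetical stand-ins so the example runs without network access.

```python
import time


def scrape_all_pages(fetch_html, extract_items, url_template,
                     max_pages=50, delay_seconds=1.0):
    """Walk numbered pages until one yields no items or max_pages is reached.

    fetch_html:    callable taking a URL and returning the page body as text
    extract_items: callable taking the page body and returning a list of items
    url_template:  string containing a {page} placeholder
    """
    collected = []
    for page_number in range(1, max_pages + 1):
        html = fetch_html(url_template.format(page=page_number))
        items = extract_items(html)
        if not items:
            break  # an empty page is treated as the end of the listing
        collected.extend(items)
        time.sleep(delay_seconds)  # pause between requests to stay polite
    return collected


# Stand-in for a real website: maps URLs to page bodies (illustration only)
FAKE_SITE = {
    "https://example.com/items?page=1": "apple,banana",
    "https://example.com/items?page=2": "cherry",
    "https://example.com/items?page=3": "",
}


def fake_fetch(url):
    return FAKE_SITE.get(url, "")


def split_items(body):
    return [part for part in body.split(",") if part]


if __name__ == "__main__":
    print(scrape_all_pages(fake_fetch, split_items,
                           "https://example.com/items?page={page}",
                           delay_seconds=0))
```

In a real scraper, `fetch_html` would typically wrap `requests.get(url, timeout=10).text` and `extract_items` would wrap a BeautifulSoup query such as `soup.find_all('h2')`. Passing them in as callables keeps the loop itself easy to test.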

By mastering web scraping with Python, you can efficiently gather data from various websites, thereby enhancing your data analysis capabilities and decision-making processes.

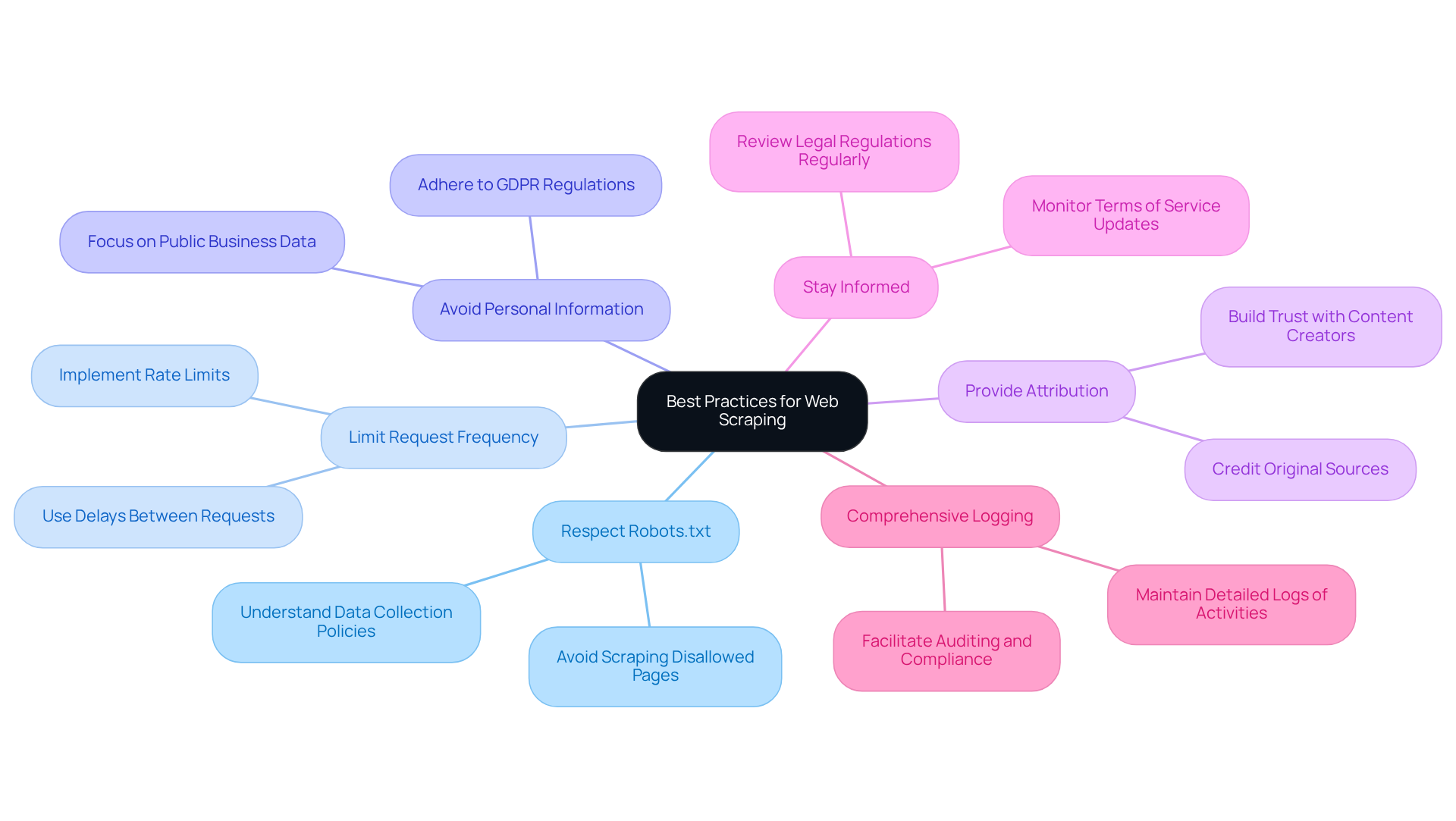

Adhere to Best Practices and Ethical Guidelines

When engaging in web scraping, it is crucial to adhere to best practices and ethical guidelines. This not only ensures compliance but also fosters positive relationships with website owners.

- Respect Robots.txt: Always check the robots.txt file of a website to understand its data collection policies. This file indicates which areas of the site are restricted for crawlers, ensuring that your data collection activities align with the site's preferences.

- Limit Request Frequency: To avoid overwhelming servers, implement rate limits and delays between requests. Excessive scraping can lead to IP bans or legal actions. Companies have reported significant costs associated with copyright violations from illegal scraping, which can reach up to $150,000 per work (source: legal studies on copyright violations).

- Avoid Personal Information: Scraping personal information without consent can lead to serious legal consequences. Ethical scrapers should concentrate on gathering only public business information, as the General Data Protection Regulation (GDPR) and other privacy laws impose strict requirements on managing personal details. It is crucial to guarantee adherence to GDPR when handling any personal information.

- Provide Attribution: If you publish scraped data, give credit to the original source. This practice not only maintains ethical standards but also builds trust with content creators and website owners.

- Stay Informed: Regularly review legal regulations regarding web data extraction in your jurisdiction to ensure compliance. Legal experts emphasize the importance of understanding the implications of Terms of Service (TOS) violations, which can lead to being blocked or flagged by the site. For example, the hiQ Labs v. LinkedIn case demonstrates the possible outcomes of TOS violations in web data extraction.

- Comprehensive Logging: Maintain detailed logs of your data collection activities for responsible data stewardship. This practice helps in auditing and ensuring compliance with ethical standards.
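The robots.txt check from the first guideline can be automated with Python's standard-library `urllib.robotparser`. The sketch below parses a sample file from a string so that it runs offline; the rules shown, the `my-scraper` user agent, and the helper names are assumptions made for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real one lives at https://<site>/robots.txt
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_txt):
    """Create a parser from the text of a robots.txt file."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_allowed(parser, url, user_agent="my-scraper"):
    """Return True if the rules permit this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    rules = build_parser(SAMPLE_ROBOTS_TXT)
    print(is_allowed(rules, "https://example.com/products"))
    print(is_allowed(rules, "https://example.com/private/data"))
    print(rules.crawl_delay("my-scraper"))
```

Against a live site, the same parser can load the file directly by calling `set_url()` with the robots.txt address followed by `read()`. A crawl delay, where the site declares one, is a natural lower bound for the pause between requests.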

By following these guidelines, you can conduct web scraping effectively while respecting the rights of website owners and minimizing legal risks.
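Two of the guidelines above, limiting request frequency and keeping logs, fit naturally into one small helper. The following is a sketch under stated assumptions: `Throttle` and `polite_fetch` are hypothetical names, and `fetch` is any callable that takes a URL and returns its content (for example, a thin wrapper around `requests.get`).

```python
import logging
import time

logging.basicConfig(level=logging.INFO,
                    format="%(asctime)s %(levelname)s %(message)s")
logger = logging.getLogger("scraper")


class Throttle:
    """Enforce a minimum pause between consecutive calls to wait()."""

    def __init__(self, min_interval_seconds):
        self.min_interval_seconds = min_interval_seconds
        self._last_call = None

    def wait(self):
        now = time.monotonic()
        if self._last_call is not None:
            remaining = self.min_interval_seconds - (now - self._last_call)
            if remaining > 0:
                time.sleep(remaining)
        self._last_call = time.monotonic()


def polite_fetch(urls, fetch, throttle):
    """Fetch each URL in turn, pausing between requests and logging each one."""
    results = {}
    for url in urls:
        throttle.wait()
        logger.info("fetching %s", url)
        results[url] = fetch(url)
    return results


if __name__ == "__main__":
    # A stand-in fetch function so the example runs without network access
    pages = polite_fetch(
        ["https://example.com/a", "https://example.com/b"],
        fetch=lambda url: f"<html>content of {url}</html>",
        throttle=Throttle(min_interval_seconds=1.0),
    )
    print(len(pages))
```

The log lines record which URL was requested and when, which is the raw material for the auditing described above. The interval itself should be tuned to the target site, erring on the side of slower.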

Conclusion

Mastering web scraping with Python empowers both individuals and organizations to extract valuable data efficiently and ethically. This tutorial has provided an overview of the essential techniques and setup required to navigate the complexities of web scraping, equipping readers with the skills needed to use this powerful tool.

Key points discussed include the fundamentals of web scraping, such as:

- Understanding HTML structure

- HTTP requests

- The importance of ethical practices

The article outlined the steps to set up a Python environment tailored for web scraping, including:

- Installing necessary libraries

- Creating a virtual environment

Practical techniques for implementing web scraping were covered, emphasizing the significance of:

- Parsing HTML

- Handling pagination effectively

As the demand for data-driven insights continues to grow, embracing the principles of ethical web scraping becomes increasingly significant. By adhering to best practices, respecting website owners' rights, and staying informed about legal regulations, individuals can conduct web scraping responsibly. This approach not only enhances data collection efforts but also fosters trust and collaboration within the digital ecosystem. Engaging in web scraping with a focus on sustainability and compliance will undoubtedly lead to more fruitful and lasting outcomes.

Frequently Asked Questions

What is web scraping?

Web scraping, also known as web harvesting, is an automated process for extracting data from websites, increasingly adopted by businesses for data extraction.

What percentage of companies are expected to use web data extraction by 2025?

Approximately 60% of companies are expected to employ web data extraction to gather insights and enhance decision-making by 2025.

What are the key components involved in the web scraping process?

The key components include sending requests to a web server, obtaining the webpage content, and analyzing it to extract the desired information.

Why is understanding HTML and CSS important for web scraping?

A strong understanding of HTML tags and attributes, as well as CSS, is crucial for recognizing and obtaining pertinent information from web pages.

What types of HTTP requests should one be familiar with for web scraping?

It is important to understand GET and POST requests, which are vital for interacting with web servers and obtaining information.

What formats can information be extracted in during web scraping?

Information can be extracted in various formats, including JSON, XML, or plain text, to ensure compatibility with applications.

Which types of companies utilize web extraction?

Companies such as e-commerce platforms tracking competitor pricing, financial institutions analyzing market trends, and marketing agencies gathering information for sentiment analysis utilize web extraction.

What challenges are associated with web extraction?

Challenges include managing AJAX calls, timed sessions, and navigating anti-bot measures.

What ethical considerations should be taken into account when web scraping?

It is essential to respect terms of service and copyright laws to ensure sustainable data collection.

How can mastering web scraping fundamentals benefit individuals?

Mastering these fundamentals prepares individuals to explore the practical aspects of web scraping with Python, enabling them to leverage this tool for data-driven insights.